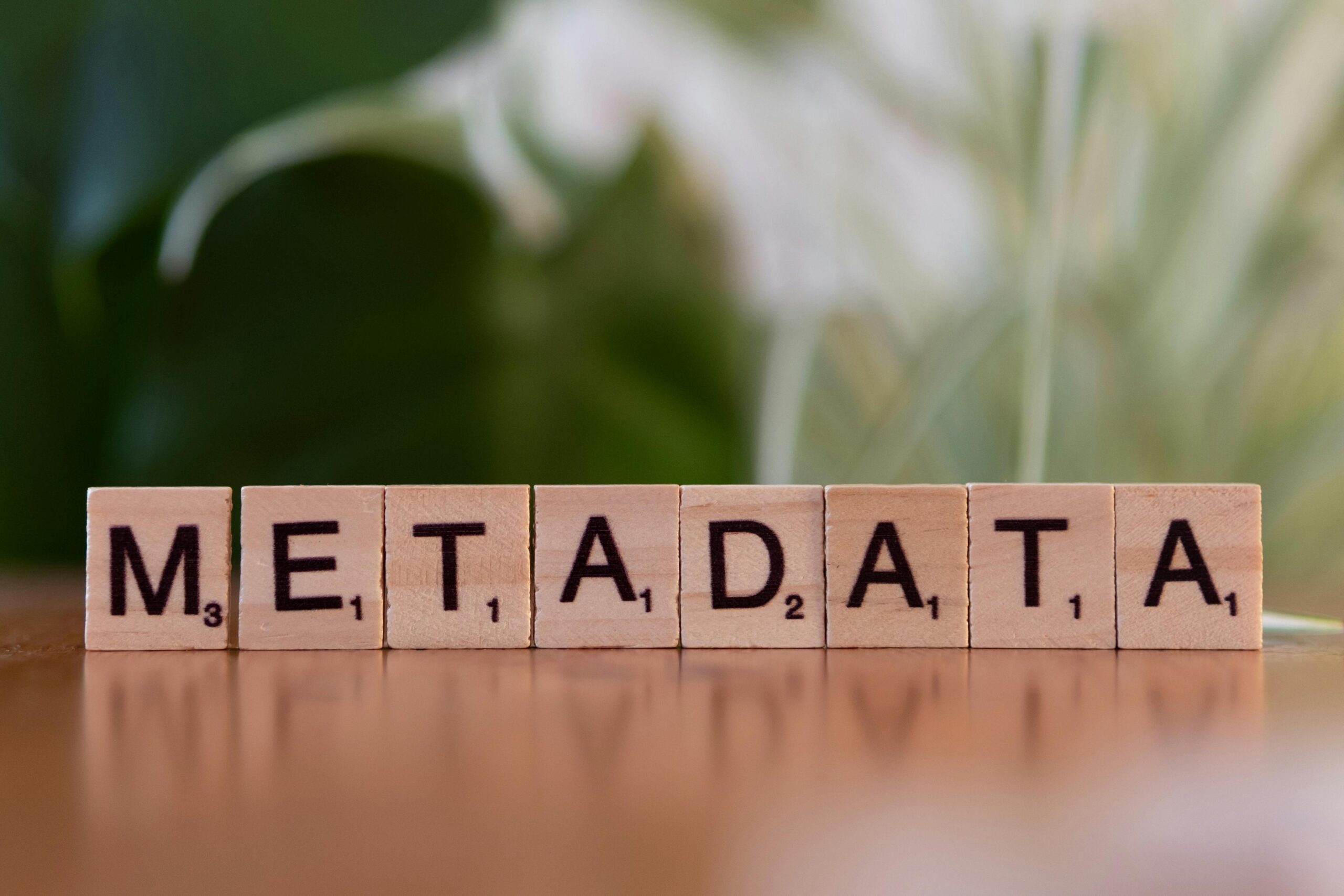

Metadata serves as the backbone of modern data management, transforming raw information into organized, discoverable, and sustainable digital assets that stand the test of time.

🎯 Why Metadata Matters More Than Ever

In an era where organizations generate terabytes of data daily, the ability to find, understand, and utilize information efficiently has become a competitive necessity. Metadata—literally “data about data”—provides the context, structure, and descriptive framework that makes datasets meaningful beyond their initial creation.

Without proper metadata management, datasets become orphaned files that lose value over time. Research conducted by Forrester indicates that employees spend nearly 2.5 hours a day searching for information, and poor data documentation and organization account for much of that inefficiency. Multiplied across a workforce, that lost time becomes a substantial recurring cost in every industry.

Effective metadata practices enable organizations to preserve institutional knowledge, facilitate collaboration across teams, ensure regulatory compliance, and maximize return on data investments. As datasets grow larger and more complex, metadata becomes the map that guides users through increasingly intricate information landscapes.

📋 Understanding the Metadata Ecosystem

Metadata exists in multiple forms, each serving distinct purposes within the data management lifecycle. Recognizing these categories helps organizations implement comprehensive metadata strategies that address various user needs and operational requirements.

Descriptive Metadata: Making Data Discoverable

Descriptive metadata answers fundamental questions about dataset identity and content. This category includes titles, authors, abstracts, keywords, and subject classifications that enable users to locate relevant information through search and browsing mechanisms.

Strong descriptive metadata incorporates controlled vocabularies and standardized taxonomies that create consistency across datasets. When properly implemented, these elements transform data repositories into searchable libraries where users can efficiently identify resources matching their specific requirements.

Structural Metadata: Defining Relationships and Organization

Structural metadata describes how data components relate to one another and how complex datasets are organized internally. This includes information about file formats, database schemas, hierarchical relationships, and the sequencing of multi-part resources.

For databases, structural metadata documents table relationships, primary keys, foreign keys, and indexing strategies. For file-based datasets, it captures directory structures, naming conventions, and dependencies between related files. This organizational intelligence prevents data fragmentation and supports long-term maintainability.
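Much of this structural metadata can be harvested directly from the database catalog rather than typed by hand. The sketch below does this for SQLite using only Python's standard library: it reads column types, primary keys, and foreign keys through SQLite's `pragma_table_info` and `pragma_foreign_key_list` table-valued functions. The `stations` and `readings` tables are hypothetical, and other engines expose the same information differently (for example through `information_schema` views).

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> dict:
    """Harvest columns, primary keys, and foreign keys for every user table."""
    schema = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # One row per column; pk > 0 gives the column's position in the primary key.
        columns = conn.execute(
            "SELECT name, type, pk FROM pragma_table_info(?) ORDER BY cid", (table,)
        ).fetchall()
        # One row per foreign-key column: (local column, referenced table, referenced column).
        foreign_keys = conn.execute(
            'SELECT "from", "table", "to" FROM pragma_foreign_key_list(?)', (table,)
        ).fetchall()
        schema[table] = {
            "columns": {name: col_type for name, col_type, _ in columns},
            "primary_key": [name for name, _, pk in sorted(columns, key=lambda c: c[2]) if pk > 0],
            "foreign_keys": [
                {"column": col, "references": f"{ref_table}.{ref_col}"}
                for col, ref_table, ref_col in foreign_keys
            ],
        }
    return schema


# Usage with two hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE stations (station_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE readings (
        reading_id INTEGER PRIMARY KEY,
        station_id INTEGER REFERENCES stations(station_id),
        temperature_c REAL
    );
    """
)
schema = describe_schema(conn)
```

Because the output is plain dictionaries, it can be stored alongside descriptive metadata or diffed between releases to detect schema changes.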

Administrative Metadata: Tracking Lifecycle and Governance

Administrative metadata captures information essential for managing datasets throughout their lifecycle. This category encompasses technical details about file creation, modification dates, access permissions, preservation actions, rights management, and provenance information.

Subcategories within administrative metadata include preservation metadata (information supporting long-term storage and migration), rights metadata (intellectual property and usage restrictions), and technical metadata (specifications about file types, compression, and hardware dependencies).

🔧 Building a Robust Metadata Framework

Successful metadata implementation requires strategic planning that balances comprehensiveness with practicality. Organizations must establish frameworks that capture essential information without creating unsustainable documentation burdens.

Selecting Appropriate Metadata Standards

Metadata standards provide pre-defined structures that promote interoperability and consistency. Numerous domain-specific and general-purpose standards exist, each optimized for particular use cases and communities.

Dublin Core offers a simple, widely adopted standard with 15 core elements suitable for describing diverse digital resources. The DataCite Metadata Schema is designed specifically for research datasets, supporting proper citation and academic discovery. The DDI (Data Documentation Initiative) serves the social, behavioral, and economic sciences with detailed variable-level documentation.

Domain-specific standards address specialized requirements: the FGDC Content Standard for Digital Geospatial Metadata (CSDGM) and ISO 19115 for geospatial data, PREMIS for digital preservation, EML (Ecological Metadata Language) for ecological research, and DICOM for medical imaging. Selecting standards aligned with your domain ensures compatibility with relevant repositories and tools while meeting community expectations.
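To make the Dublin Core case concrete, here is a minimal sketch that checks a record against the 15 elements of the Dublin Core Metadata Element Set (version 1.1) and serializes it as `dc:`-prefixed XML with Python's `xml.etree.ElementTree`. The `metadata` wrapper element is a hypothetical container; real exchange profiles such as OAI-PMH's `oai_dc` define their own wrapper and namespaces.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"

# The 15 elements of the Dublin Core Metadata Element Set, version 1.1.
DC_ELEMENTS = {
    "contributor", "coverage", "creator", "date", "description",
    "format", "identifier", "language", "publisher", "relation",
    "rights", "source", "subject", "title", "type",
}


def dublin_core_xml(record: dict[str, list[str]]) -> str:
    """Serialize a record as dc:* elements; each element is optional and repeatable."""
    unknown = set(record) - DC_ELEMENTS
    if unknown:
        raise ValueError(f"not Dublin Core elements: {sorted(unknown)}")
    ET.register_namespace("dc", DC_NS)
    root = ET.Element("metadata")  # hypothetical wrapper element
    for element in sorted(record):
        for value in record[element]:
            ET.SubElement(root, f"{{{DC_NS}}}{element}").text = value
    return ET.tostring(root, encoding="unicode")


xml_text = dublin_core_xml({
    "title": ["Hourly station readings"],
    "creator": ["Example Weather Lab"],
    "subject": ["meteorology", "air temperature"],
})
```

Rejecting unknown element names up front is what keeps records interoperable: a typo such as `author` instead of `creator` fails loudly instead of producing a record other systems silently ignore.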

Designing Custom Metadata Schemas

While standards provide excellent foundations, many organizations also need customized schemas that address unique requirements. Effective schema design begins with stakeholder consultation to identify information needs across data creators, managers, and consumers.

Custom schemas should balance specificity and flexibility, capturing essential details without excessive complexity. Consider implementing tiered metadata approaches where basic elements are mandatory for all datasets, while specialized fields remain optional or apply only to specific data types.
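A tiered schema can be expressed as little more than a few sets of field names. In the sketch below, every field name and data type is hypothetical: a core tier applies to all datasets, a second tier adds fields only for certain data types, and anything else is optional.

```python
# Tier 1: required for every dataset (field names are hypothetical).
CORE_FIELDS = {"title", "creator", "description", "license", "created"}

# Tier 2: extra fields required only for particular data types.
TYPE_FIELDS = {
    "geospatial": {"spatial_extent", "coordinate_reference_system"},
    "time_series": {"temporal_extent", "sampling_interval"},
}
# Any other field a record carries is treated as optional (tier 3).


def required_fields(data_type: str) -> set[str]:
    """Core fields plus any fields specific to this data type."""
    return CORE_FIELDS | TYPE_FIELDS.get(data_type, set())


def missing_fields(record: dict, data_type: str) -> list[str]:
    """Required fields that are absent or empty, sorted for stable reporting."""
    return sorted(f for f in required_fields(data_type) if not record.get(f))


record = {
    "title": "Hourly station readings",
    "creator": "Example Weather Lab",
    "description": "Air temperature at 2 m from a station network.",
    "license": "CC-BY-4.0",
    "created": "2024-01-05",
    "temporal_extent": "2023-01-01/2023-12-31",
}
```

Keeping the tiers as data rather than hard-coded checks means a new data type can be supported by adding one dictionary entry.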

Documenting the metadata schema itself ensures that teams interpret and apply it consistently over time. This meta-documentation should define each field, provide usage examples, and specify the controlled vocabularies or validation rules that apply.

⚙️ Implementing Metadata in Practice

Translating metadata principles into operational reality requires appropriate tools, clear workflows, and organizational commitment to data documentation as a core professional responsibility.

Leveraging Metadata Management Tools

Specialized software platforms simplify metadata creation, storage, and maintenance while enforcing consistency and standards compliance. Data catalogs like Apache Atlas, Alation, and Collibra provide enterprise-grade solutions with automated metadata harvesting, lineage tracking, and collaborative governance features.

Open-source repository platforms such as CKAN, Dataverse, and DSpace offer robust capabilities for organizations with the technical resources to run self-hosted instances. These platforms support metadata standards, provide APIs for programmatic access, and integrate with research workflows.

For file-based datasets, embedded metadata within files themselves (using formats like HDF5, NetCDF, or GeoTIFF) ensures documentation travels with data. Sidecar files—separate metadata documents accompanying datasets—provide flexible alternatives when embedded options aren’t feasible.
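A sidecar file needs only a predictable name and a machine-readable format. The sketch below assumes a hypothetical convention of `<data file name>.meta.json` stored beside the data file and uses JSON from Python's standard library; the field names are illustrative.

```python
import json
import tempfile
from pathlib import Path


def sidecar_path(data_path: Path) -> Path:
    """Hypothetical naming convention: readings.csv -> readings.csv.meta.json."""
    return data_path.with_name(data_path.name + ".meta.json")


def write_sidecar(data_path: Path, metadata: dict) -> Path:
    """Write metadata next to the data file, with sorted keys for readable diffs."""
    target = sidecar_path(data_path)
    target.write_text(json.dumps(metadata, indent=2, sort_keys=True), encoding="utf-8")
    return target


def read_sidecar(data_path: Path) -> dict:
    return json.loads(sidecar_path(data_path).read_text(encoding="utf-8"))


# Usage in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "readings.csv"
    data_file.write_text("station_id,temperature_c\n1,12.5\n", encoding="utf-8")
    written = write_sidecar(data_file, {"title": "Hourly readings", "format": "text/csv"})
    restored = read_sidecar(data_file)
```

Deriving the sidecar name from the data file name keeps the pair together when directories are copied or archived.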

Automating Metadata Capture

Manual metadata entry creates bottlenecks and introduces inconsistencies. Automation strategies reduce documentation burden while improving metadata quality and completeness.

Technical metadata can often be extracted automatically from files and systems: creation dates, file sizes, formats, checksums, and system-level properties require no human intervention. Programmatic extraction tools can derive statistical summaries, variable lists, and structural information from datasets.
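As a small example of capture without human input, the sketch below collects file name, size, modification time, a guessed media type, and a SHA-256 checksum using Python's standard library. It records modification time rather than creation time because file creation timestamps are not reported consistently across operating systems; the output field names are illustrative.

```python
import hashlib
import mimetypes
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def technical_metadata(path: Path, chunk_size: int = 1 << 20) -> dict:
    """Extract technical metadata from a file without reading it all into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    stat = path.stat()
    mime_type, _ = mimetypes.guess_type(path.name)  # guessed from the extension only
    return {
        "file_name": path.name,
        "size_bytes": stat.st_size,
        "modified": datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc).isoformat(),
        "format": mime_type or "application/octet-stream",
        "sha256": digest.hexdigest(),
    }


SAMPLE_BYTES = b"station_id,temperature_c\n1,12.5\n"
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "readings.csv"
    sample.write_bytes(SAMPLE_BYTES)
    info = technical_metadata(sample)
```

Storing the checksum lets later audits confirm that a file still matches the metadata describing it.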

Machine learning approaches increasingly support metadata generation, with natural language processing extracting keywords and topics from text documents, and computer vision generating descriptive tags for images. These automated outputs still require human review, but they can substantially accelerate metadata creation workflows.

📊 Metadata Quality and Maintenance

Creating metadata represents only the beginning; maintaining quality over time requires ongoing attention and systematic approaches to validation and updating.

Establishing Quality Criteria

High-quality metadata exhibits completeness, accuracy, consistency, timeliness, and accessibility. Completeness ensures all required fields contain values; accuracy verifies information correctly describes datasets; consistency maintains uniform approaches across similar resources.

Timeliness keeps metadata current as datasets evolve, while accessibility ensures metadata remains discoverable and understandable to intended audiences. Regular metadata audits assess these dimensions and identify improvement opportunities.
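Two of these dimensions are easy to measure automatically. The sketch below assumes hypothetical record fields: completeness is the share of required fields that are filled across a set of records, and a record is flagged as stale when its metadata was last updated before its data was last modified.

```python
from datetime import date


def audit(records: list[dict], required: set[str]) -> dict:
    """Completeness = share of required fields filled; stale = metadata older than the data."""
    total = len(records) * len(required)
    filled = sum(1 for r in records for f in required if r.get(f))
    stale = sorted(
        r["id"]
        for r in records
        if r.get("metadata_updated") and r.get("data_modified")
        and r["metadata_updated"] < r["data_modified"]
    )
    return {"completeness": filled / total if total else 1.0, "stale": stale}


records = [
    {"id": "ds-1", "title": "Hourly readings", "creator": "Example Lab", "license": "CC-BY-4.0",
     "metadata_updated": date(2024, 1, 10), "data_modified": date(2024, 1, 5)},
    {"id": "ds-2", "title": "Daily summary", "creator": "", "license": None,
     "metadata_updated": date(2023, 6, 1), "data_modified": date(2024, 2, 1)},
]
report = audit(records, {"title", "creator", "license"})
```

Accuracy and accessibility still need human judgment, but numbers like these give a periodic audit a concrete starting point.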

Validation and Quality Control

Automated validation catches common metadata errors before they compromise data discovery and use. Schema validation confirms metadata records conform to structural requirements, while controlled vocabulary validation ensures terms match approved taxonomies.
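A minimal sketch of both checks follows, written with plain Python instead of a schema library. The schema (field name mapped to expected type) and the `subject` vocabulary are hypothetical; production systems would more likely express the schema in a standard such as JSON Schema or XML Schema.

```python
# Hypothetical record schema: field name -> expected Python type.
SCHEMA = {"title": str, "created": str, "subject": list}

# Hypothetical controlled vocabulary for the "subject" field.
SUBJECT_VOCABULARY = {"air temperature", "precipitation", "wind speed", "relative humidity"}


def validate(record: dict) -> list[str]:
    """Return human-readable errors; an empty list means the record passed."""
    errors = []
    # Structural check: required fields present with the expected types.
    for name, expected in SCHEMA.items():
        if name not in record:
            errors.append(f"{name}: missing")
        elif not isinstance(record[name], expected):
            errors.append(f"{name}: expected {expected.__name__}, got {type(record[name]).__name__}")
    # Vocabulary check: every subject term must come from the approved list.
    subjects = record.get("subject")
    if isinstance(subjects, list):
        for term in subjects:
            if term not in SUBJECT_VOCABULARY:
                errors.append(f"subject: {term!r} not in controlled vocabulary")
    return errors
```

For example, `validate({"title": 42, "subject": ["temp"]})` reports the wrong type for `title`, the missing `created` field, and the unapproved term `temp`.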

Referential integrity checks verify links between related records remain valid. Completeness analysis identifies missing required fields or suspiciously sparse documentation. Regular validation reports help metadata stewards prioritize quality improvement efforts.
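A referential integrity check reduces to confirming that every link points at a record that exists. The sketch below assumes a hypothetical catalog keyed by record ID, where each record lists related record IDs under a `related` field.

```python
def broken_references(catalog: dict[str, dict]) -> list[tuple[str, str]]:
    """Return (record_id, missing_target) pairs for links to records not in the catalog."""
    return [
        (record_id, target)
        for record_id, record in sorted(catalog.items())
        for target in record.get("related", [])
        if target not in catalog
    ]


catalog = {
    "ds-1": {"related": ["ds-2"]},
    "ds-2": {"related": ["ds-1", "ds-9"]},  # ds-9 was deleted or never registered
    "ds-3": {},
}
```

Here the check returns `[("ds-2", "ds-9")]`, which is exactly the kind of item a validation report would hand to a metadata steward.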

Versioning and Change Management

As datasets evolve through updates and corrections, metadata must track these changes while preserving historical context. Versioning strategies document what changed, when, and why—information crucial for reproducibility and proper data interpretation.

Some metadata elements should track versions explicitly (modification dates, version numbers, change logs), while others describe datasets at specific points in time. Clear policies about when to update existing metadata versus creating new version records prevent confusion and support proper dataset citation.
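One way to enforce a "new version record, never silent edits" policy is an append-only history. In the sketch below, the class and field names are hypothetical: each version is an immutable record carrying its change note, and reusing an existing version number is rejected.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class VersionRecord:
    """One immutable entry in a dataset's version history."""
    version: str
    released: date
    change_note: str  # what changed and why


@dataclass
class DatasetHistory:
    dataset_id: str
    versions: list[VersionRecord] = field(default_factory=list)

    def release(self, version: str, released: date, change_note: str) -> VersionRecord:
        """Append a new version; existing records are never edited in place."""
        if any(v.version == version for v in self.versions):
            raise ValueError(f"{self.dataset_id} {version} already exists; release a new version")
        record = VersionRecord(version, released, change_note)
        self.versions.append(record)
        return record

    def latest(self) -> VersionRecord:
        return self.versions[-1]


history = DatasetHistory("ds-1")
history.release("1.0.0", date(2024, 1, 5), "Initial release")
history.release("1.1.0", date(2024, 3, 2), "Corrected a calibration offset at one station")
```

Because older records are never overwritten, a citation of version 1.0.0 keeps pointing at a description that matches what the citing researcher actually used.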

🔐 Metadata for Data Governance and Compliance

Metadata plays critical roles in regulatory compliance, privacy protection, and organizational governance by documenting data lineage, access controls, and retention requirements.

Supporting Regulatory Requirements

Regulations like GDPR, HIPAA, and industry-specific mandates impose documentation requirements that metadata helps satisfy. Data lineage metadata traces information flows from collection through processing to dissemination, demonstrating compliance with usage restrictions and consent limitations.

Retention metadata documents how long datasets must be preserved and when destruction should occur. Sensitivity classifications and access restrictions embedded in metadata support privacy protection by controlling who can view or use particular information.
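Once retention periods and sensitivity levels are recorded as metadata, both can drive automated decisions. The sketch below uses hypothetical fields (`collected`, `retention_years`, `sensitivity`) and a hypothetical four-level classification; actual retention rules and access models come from the applicable regulation and organizational policy.

```python
from datetime import date

# Hypothetical sensitivity levels, ordered from least to most restricted.
LEVELS = ["public", "internal", "confidential", "restricted"]


def add_years(d: date, years: int) -> date:
    try:
        return d.replace(year=d.year + years)
    except ValueError:  # 29 February landing in a non-leap year
        return d.replace(year=d.year + years, day=28)


def destruction_due(record: dict) -> date:
    """Date on which the retention period recorded in the metadata expires."""
    return add_years(record["collected"], record["retention_years"])


def can_access(user_clearance: str, record: dict) -> bool:
    """A user may read a record if their clearance is at least its sensitivity level."""
    return LEVELS.index(user_clearance) >= LEVELS.index(record["sensitivity"])


record = {"collected": date(2020, 2, 29), "retention_years": 5, "sensitivity": "confidential"}
```

For this record, destruction falls due on 28 February 2025, and only users cleared for `confidential` or `restricted` data pass the access check.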

Enabling Data Lineage and Provenance

Understanding data origins, transformations, and dependencies proves essential for trust, reproducibility, and impact assessment. Provenance metadata documents the complete history of dataset creation and modification, including source materials, processing steps, and responsible parties.

Lineage information supports quality assessment by revealing data dependencies and transformation logic. When datasets feed downstream analyses or decision processes, lineage metadata enables impact analysis—identifying all affected resources when source data changes or proves flawed.
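Impact analysis over lineage metadata is a graph traversal. The sketch below assumes lineage is stored as a hypothetical mapping from each resource to the resources derived from it, and uses breadth-first search to collect everything downstream of a changed or flawed source.

```python
from collections import deque


def downstream(lineage: dict[str, list[str]], changed: str) -> list[str]:
    """Every resource derived, directly or transitively, from `changed`."""
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    seen.discard(changed)  # guard against cycles that lead back to the source
    return sorted(seen)


lineage = {
    "raw_readings": ["cleaned_readings"],
    "station_registry": ["cleaned_readings"],
    "cleaned_readings": ["daily_summary", "anomaly_model"],
    "daily_summary": ["monthly_report"],
}
```

If `raw_readings` turns out to be flawed, `downstream(lineage, "raw_readings")` lists the cleaned data, the summary, the model, and the report that all need review.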

🌐 Metadata for Interoperability and FAIR Principles

The FAIR principles—Findable, Accessible, Interoperable, and Reusable—provide internationally recognized guidelines for scientific data management, with metadata serving as the foundation for all four dimensions.

Making Data Findable

Findability requires persistent identifiers (like DOIs), rich descriptive metadata, and registration in searchable resources. Metadata must be indexed by discovery services and include sufficient detail for users to assess dataset relevance without accessing the data itself.

Keywords, subject classifications, geographic coverage, and temporal scope help users locate pertinent datasets. Author information and organizational affiliations connect datasets with responsible parties who can answer questions or provide context.

Ensuring Interoperability

Interoperable metadata uses standardized vocabularies and formats that enable both human and machine processing across systems. Formal ontologies and linked data approaches create semantic relationships between concepts, supporting sophisticated discovery and integration.

APIs exposing metadata through standardized protocols (like OAI-PMH or SPARQL) enable automated harvesting and aggregation across repositories. Machine-readable formats (JSON-LD, XML, RDF) facilitate programmatic metadata processing.
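As one example of a machine-readable format, the sketch below maps an internal record onto the schema.org `Dataset` type and serializes it as JSON-LD. The input field names are hypothetical, and the DOI is a placeholder rather than a real identifier.

```python
import json


def to_jsonld(record: dict) -> str:
    """Map an internal record (hypothetical field names) onto schema.org's Dataset type."""
    doc = {
        "@context": "https://schema.org/",
        "@type": "Dataset",
        "name": record["title"],
        "description": record["description"],
        "identifier": record.get("doi"),
        "keywords": record.get("keywords", []),
        "license": record.get("license"),
        "creator": [{"@type": "Organization", "name": n} for n in record.get("creators", [])],
    }
    # Drop properties with no value rather than emitting nulls or empty lists.
    return json.dumps({k: v for k, v in doc.items() if v not in (None, [])}, indent=2)


jsonld = to_jsonld({
    "title": "Hourly station readings",
    "description": "Air temperature and sky condition from a hypothetical station network.",
    "doi": "https://doi.org/10.5555/example",  # placeholder, not a registered DOI
    "keywords": ["meteorology", "air temperature"],
    "creators": ["Example Weather Lab"],
})
```

Embedding output like this in a dataset's landing page is a common way to make it visible to discovery services that read schema.org markup.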

Promoting Reusability

Reusable datasets include detailed provenance, clear usage licenses, and sufficient contextual information for proper interpretation. Metadata should document data collection methodologies, quality assurance procedures, known limitations, and recommended applications.

Variable-level metadata—detailed descriptions of individual data fields including units, coding schemes, and allowed values—proves essential for correct dataset interpretation and reuse in new contexts.
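A data dictionary becomes more valuable when the same entries that document each variable are also used to check the data. In the sketch below, the variables, units, and valid range are hypothetical; the sky-condition codes follow the cover abbreviations used in METAR weather reports.

```python
# Hypothetical data dictionary: description, unit, type, and valid values per variable.
DATA_DICTIONARY = {
    "temperature_c": {"description": "Air temperature at 2 m", "unit": "degC",
                      "type": float, "range": (-90.0, 60.0)},
    "sky_condition": {"description": "Observed sky cover", "unit": None,
                      "type": str, "allowed": {"CLR", "FEW", "SCT", "BKN", "OVC"}},
}


def check_row(row: dict) -> list[str]:
    """Validate one data row against the variable-level metadata."""
    problems = []
    for name, spec in DATA_DICTIONARY.items():
        value = row.get(name)
        if value is None:
            problems.append(f"{name}: missing")
            continue
        if not isinstance(value, spec["type"]):
            problems.append(f"{name}: expected {spec['type'].__name__}")
            continue
        if "range" in spec:
            low, high = spec["range"]
            if not low <= value <= high:
                problems.append(f"{name}: {value} {spec['unit']} outside [{low}, {high}]")
        if "allowed" in spec and value not in spec["allowed"]:
            problems.append(f"{name}: {value!r} is not an allowed code")
    return problems
```

A row such as `{"temperature_c": 75.0, "sky_condition": "HAZY"}` fails on both variables, and each message names the unit or code list that a reuser would need to interpret the value.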

💡 Organizational Strategies for Metadata Success

Technical solutions alone cannot ensure metadata success; organizational culture, policies, and incentives shape whether metadata practices become sustainable or fade as unfunded mandates.

Developing Metadata Policies and Guidelines

Formal policies establish metadata as an institutional priority with defined responsibilities, standards, and quality expectations. Effective policies clarify who creates metadata, when documentation occurs, what standards apply, and how quality is assessed.

Practical guidelines translate policies into actionable instructions with templates, examples, and decision trees addressing common scenarios. Training programs build metadata literacy across roles, from data creators to archivists to end users.

Creating Incentives and Recognition

High-quality metadata requires time and expertise that often go unrecognized in performance evaluations and career advancement. Organizations fostering metadata excellence make documentation a valued professional contribution rather than an administrative burden.

Recognition programs highlighting exemplary metadata practices encourage broader adoption. Integration of metadata quality into performance metrics signals organizational commitment. Providing sufficient time and resources for documentation work prevents metadata from becoming an unfunded mandate.

Building Metadata Communities of Practice

Communities of practice bring together individuals across departments and roles to share metadata expertise, solve common challenges, and develop institutional knowledge. These communities maintain living documentation, evaluate new tools and standards, and advocate for metadata needs in technology decisions.

Regular forums for metadata discussion keep practices evolving with changing needs and emerging best practices. Cross-training initiatives build metadata capabilities throughout organizations rather than concentrating expertise in isolated silos.

🚀 Future-Proofing Through Metadata Excellence

Metadata investments made today determine whether tomorrow’s researchers and analysts can understand and utilize the datasets we create. As data volumes explode and analytical techniques advance, the metadata gap—the growing disparity between data creation and documentation—threatens to leave valuable information effectively inaccessible.

Organizations embracing metadata as strategic infrastructure rather than administrative overhead position themselves to maximize data value across time. Datasets with rich, standardized metadata integrate seamlessly into new analytical environments, support emerging use cases never imagined during initial creation, and retain meaning decades after original creators move on.

The most sustainable approach treats metadata creation as integral to data production workflows rather than separate documentation tasks. When metadata generation becomes automated wherever possible and manual documentation occurs concurrently with data creation, quality improves while burden decreases.

As artificial intelligence and machine learning technologies mature, metadata takes on additional importance in training data documentation, model transparency, and algorithmic accountability. Datasets lacking proper metadata cannot support the rigorous documentation standards emerging for responsible AI development.

Investing in metadata infrastructure, skills, and culture pays dividends through improved data discovery, reduced duplication, enhanced collaboration, and datasets that remain valuable assets rather than becoming digital landfill. In the data-driven future, organizations with metadata mastery will thrive while those neglecting documentation struggle to extract value from their information resources.

The path to metadata excellence begins with recognizing that every dataset deserves documentation worthy of its potential impact. By implementing robust metadata practices today, we ensure the longevity and continued utility of the datasets shaping tomorrow’s discoveries and decisions. 📚

Toni Santos is a meteorological researcher and atmospheric data specialist focusing on the study of airflow dynamics, citizen-based weather observation, and the computational models that decode cloud behavior. Through an interdisciplinary and sensor-focused lens, Toni investigates how humanity has captured wind patterns, atmospheric moisture, and climate signals across landscapes, technologies, and distributed networks.

His work is grounded in a fascination with the atmosphere not only as a phenomenon, but as a carrier of environmental information. From airflow pattern capture systems to cloud modeling and distributed sensor networks, Toni uncovers the observational and analytical tools through which communities preserve their relationship with the atmospheric unknown.

With a background in weather instrumentation and atmospheric data history, Toni blends sensor analysis with field research to reveal how weather data is used to shape prediction, transmit climate patterns, and encode environmental knowledge.

As the creative mind behind dralvynas, Toni curates illustrated atmospheric datasets, speculative airflow studies, and interpretive cloud models that revive the deep methodological ties between weather observation, citizen technology, and data-driven science.

His work is a tribute to: the evolving methods of Airflow Pattern Capture Technology; the distributed power of Citizen Weather Technology and Networks; the predictive modeling of Cloud Interpretation Systems; and the interconnected infrastructure of Data Logging Networks and Sensors.

Whether you're a weather historian, atmospheric researcher, or curious observer of environmental data wisdom, Toni invites you to explore the hidden layers of climate knowledge, one sensor, one airflow, one cloud pattern at a time.