In today’s data-driven world, building a resilient sensor-to-cloud logging pipeline is essential for organizations seeking to harness real-time insights and maintain competitive advantage.

🚀 The Foundation of Modern Data Infrastructure

The digital transformation era has ushered in an unprecedented explosion of sensor-generated data. From IoT devices monitoring industrial equipment to smart home sensors tracking environmental conditions, the volume and velocity of data generated at the edge continue to grow rapidly. Organizations that successfully capture, transmit, and analyze this data gain invaluable insights that drive operational efficiency, predictive maintenance, and strategic decision-making.

However, the journey from sensor to cloud is fraught with challenges. Network interruptions, hardware failures, data loss, and scalability issues can disrupt even the most carefully designed systems. This is where resilience becomes not just a desirable feature, but a fundamental requirement. A robust logging pipeline must gracefully handle failures, recover from disruptions, and ensure data integrity across the entire collection chain.

Building such a pipeline requires careful consideration of architecture, technology selection, and operational practices. The goal is to create a system that not only functions optimally under ideal conditions but continues to perform reliably when facing the inevitable challenges of real-world deployment.

Understanding the Anatomy of a Sensor-to-Cloud Pipeline 📊

Before diving into resilience strategies, it’s crucial to understand the key components that constitute a modern logging pipeline. Each layer plays a distinct role in the data collection journey and presents unique resilience challenges.

Edge Layer: The First Line of Defense

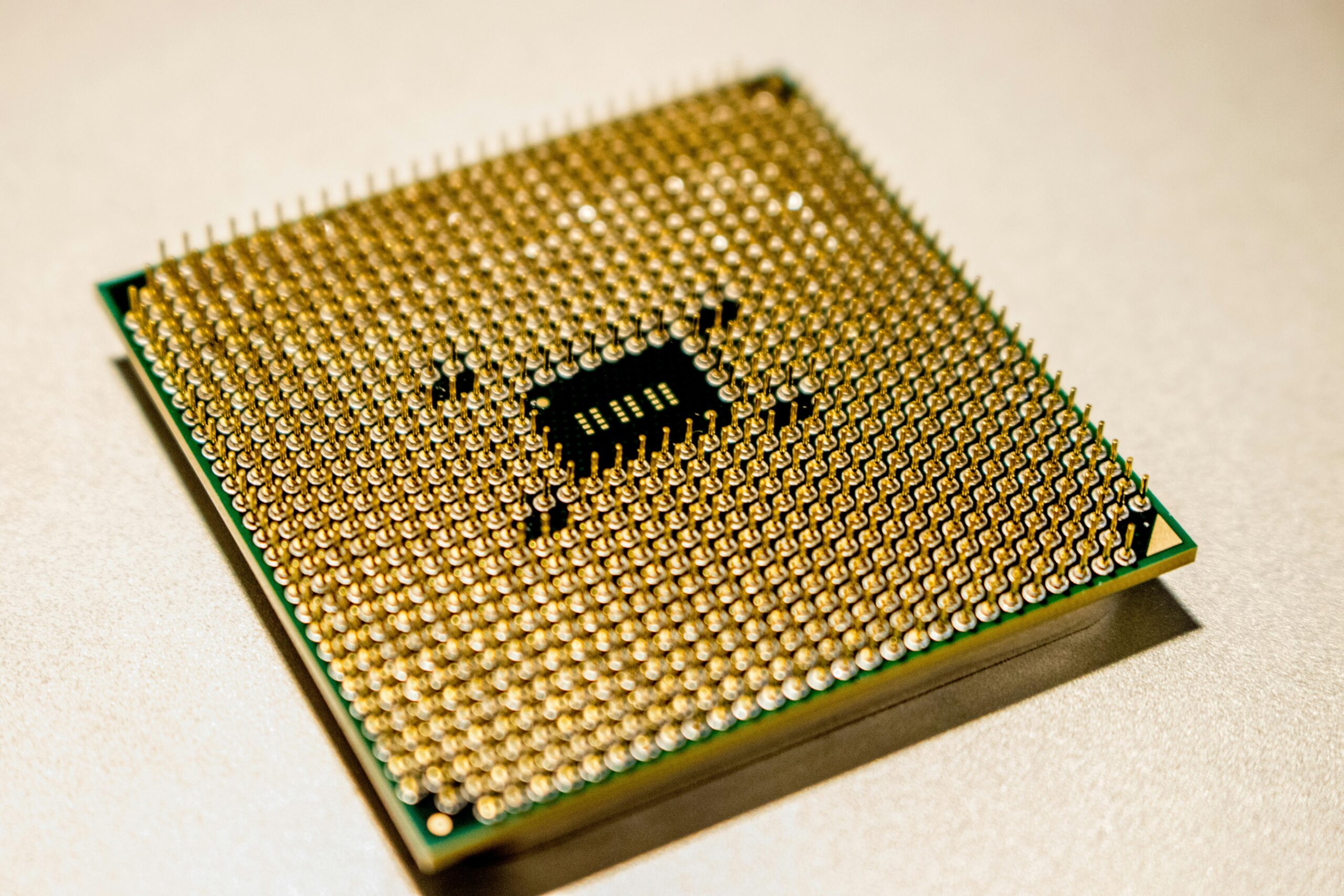

The edge layer comprises the sensors and devices that generate raw data. This layer operates in the most unpredictable environment, often in remote locations with limited connectivity and power resources. Sensors may range from simple temperature monitors to sophisticated cameras and LiDAR systems, each producing data at different rates and formats.

At this level, resilience begins with local buffering and intelligent data management. Edge devices must be capable of temporarily storing data when connectivity is lost and intelligently prioritizing which data to transmit first when connection is restored. Implementing local preprocessing can also reduce bandwidth requirements and improve overall system efficiency.
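A minimal sketch of prioritized store-and-forward is shown below. It assumes each reading carries a numeric priority (lower number means more urgent) and that the uplink is exposed as a `send` callable returning `True` on success; `EdgeBuffer` and its methods are hypothetical names, not a library API.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass(order=True)
class _Entry:
    priority: int                        # lower value is sent first (assumed convention)
    seq: int                             # keeps arrival order within one priority level
    reading: Any = field(compare=False)  # payload is never used for ordering


class EdgeBuffer:
    """Hypothetical store-and-forward buffer that drains urgent readings first."""

    def __init__(self) -> None:
        self._heap: List[_Entry] = []
        self._counter = itertools.count()

    def store(self, reading: Any, priority: int = 10) -> None:
        # Called while the uplink is down (or for every reading, if all sends go through here).
        heapq.heappush(self._heap, _Entry(priority, next(self._counter), reading))

    def drain(self, send: Callable[[Any], bool], max_items: Optional[int] = None) -> int:
        """On reconnect, send buffered readings in priority order.

        A reading is removed only after `send` reports success; the first
        failure stops the drain and leaves the rest buffered.
        """
        sent = 0
        while self._heap and (max_items is None or sent < max_items):
            if not send(self._heap[0].reading):
                break                    # link dropped again: keep everything left
            heapq.heappop(self._heap)
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._heap)
```

Because entries are popped only after a successful send, a failed transmission never loses a reading, although a reading may be sent twice if the acknowledgment itself is lost.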

Gateway Layer: The Intelligent Intermediary

Gateway devices serve as aggregation points, collecting data from multiple sensors and managing the connection to cloud services. These devices typically have more computational power and storage capacity than individual sensors, making them ideal for implementing advanced resilience features such as data validation, protocol translation, and local analytics.

A well-designed gateway layer can significantly enhance pipeline resilience by implementing retry logic, connection management, and failover mechanisms. Gateways can also perform data compression and batching to optimize bandwidth usage and reduce transmission costs.
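The batching and compression step can be sketched as follows, assuming readings are JSON-serializable dictionaries and that gzip-compressed JSON arrays are an acceptable wire format; the function names and the default batch size of 100 are illustrative choices, not taken from any particular gateway product.

```python
import gzip
import json
from typing import Dict, Iterable, Iterator, List


def _encode(batch: List[Dict]) -> bytes:
    # Compact separators shave bytes before compression even starts.
    return gzip.compress(json.dumps(batch, separators=(",", ":")).encode("utf-8"))


def batch_and_compress(readings: Iterable[Dict], batch_size: int = 100) -> Iterator[bytes]:
    """Group readings into fixed-size batches and gzip each batch as a JSON array."""
    batch: List[Dict] = []
    for reading in readings:
        batch.append(reading)
        if len(batch) >= batch_size:
            yield _encode(batch)
            batch = []
    if batch:  # flush the final, partially filled batch
        yield _encode(batch)


def decompress_batch(payload: bytes) -> List[Dict]:
    """Inverse of batch_and_compress, as the receiving side would apply it."""
    return json.loads(gzip.decompress(payload).decode("utf-8"))


readings = [{"sensor": "t1", "seq": i, "value": 20.0 + i * 0.1} for i in range(250)]
payloads = list(batch_and_compress(readings, batch_size=100))  # 100 + 100 + 50 readings
```

Repeated field names across readings are what make batches compress well. A production gateway would usually also flush on a timer so that a half-full batch is not held indefinitely during quiet periods.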

Transport Layer: The Critical Connection

The transport layer handles the actual transmission of data from edge to cloud. This is where network reliability becomes paramount. Choosing the right communication protocols—whether MQTT, HTTP/HTTPS, CoAP, or proprietary solutions—can make the difference between a fragile and robust system.

Modern transport layers implement features like Quality of Service (QoS) levels, message acknowledgment, and automatic reconnection. These capabilities ensure that data reaches its destination even in the face of intermittent connectivity or network congestion.
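The acknowledgment-and-redelivery idea behind features such as MQTT QoS 1 can be sketched independently of any protocol library. In this sketch `AckTracker`, `transmit`, and the five-second `ack_timeout` are hypothetical; it illustrates the technique and is not the MQTT wire protocol or a client library's API.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class _InFlight:
    payload: bytes
    sent_at: float
    attempts: int


class AckTracker:
    """Hypothetical at-least-once sender: keep each message until it is acknowledged."""

    def __init__(self, transmit: Callable[[int, bytes], None], ack_timeout: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._transmit = transmit
        self._ack_timeout = ack_timeout
        self._clock = clock
        self._next_id = 1
        self._in_flight: Dict[int, _InFlight] = {}

    def publish(self, payload: bytes) -> int:
        msg_id = self._next_id
        self._next_id += 1
        self._in_flight[msg_id] = _InFlight(payload, self._clock(), 1)
        self._transmit(msg_id, payload)
        return msg_id

    def on_ack(self, msg_id: int) -> None:
        # Duplicate or late acknowledgments are harmless.
        self._in_flight.pop(msg_id, None)

    def resend_expired(self) -> int:
        """Retransmit every message whose acknowledgment did not arrive in time."""
        now = self._clock()
        resent = 0
        for msg_id, entry in list(self._in_flight.items()):
            if now - entry.sent_at >= self._ack_timeout:
                entry.sent_at = now
                entry.attempts += 1
                # Reuse the same id so the receiver can discard duplicates.
                self._transmit(msg_id, entry.payload)
                resent += 1
        return resent

    @property
    def pending(self) -> int:
        return len(self._in_flight)
```

The `clock` parameter is injectable so the timeout logic can be exercised without real waiting; after a reconnect, calling `resend_expired` flushes everything that was never confirmed.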

Ingestion Layer: The Cloud Gateway

Once data reaches the cloud, the ingestion layer receives, validates, and routes it to appropriate storage and processing systems. This layer must handle high-velocity data streams while maintaining data integrity and providing back-pressure mechanisms when downstream systems become overwhelmed.
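One simple form of back-pressure is a bounded hand-off queue that refuses new messages once it is full, rather than growing memory without limit. The sketch below assumes an HTTP-style interface where 202 means "accepted" and 429 means "slow down and retry"; `IngestionEndpoint` is a hypothetical class, not a managed-service API.

```python
import queue
from typing import Any


class IngestionEndpoint:
    """Hypothetical ingestion front door with a bounded queue to downstream workers."""

    def __init__(self, capacity: int = 10_000) -> None:
        self._queue: "queue.Queue[Any]" = queue.Queue(maxsize=capacity)

    def receive(self, message: Any) -> int:
        try:
            self._queue.put_nowait(message)
            return 202  # accepted for asynchronous processing
        except queue.Full:
            return 429  # downstream is behind: the sender should back off and retry

    def next_for_processing(self, timeout: float = 1.0) -> Any:
        # Downstream workers pull from here; raises queue.Empty if nothing arrives in time.
        return self._queue.get(timeout=timeout)
```

Pushing the overload signal back to senders only works if they buffer and retry on a 429, which is why back-pressure at ingestion and buffering at the edge are complementary.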

Cloud-based ingestion services like AWS IoT Core and Azure IoT Hub provide managed solutions with built-in resilience features (Google Cloud IoT Core, once a comparable option, was retired in August 2023). However, understanding their limitations and implementing additional safeguards remains essential for mission-critical applications.

⚡ Implementing Resilience at Every Level

Building resilience into your logging pipeline requires a multi-layered approach. Each component must contribute to the overall system reliability while maintaining operational efficiency.

Local Buffering and Persistence

One of the most effective resilience strategies is implementing robust local buffering at both the edge and gateway layers. When connectivity fails or downstream systems become unavailable, local storage ensures that data is not lost. The key considerations include:

- Storage capacity planning based on expected outage durations and data generation rates

- Efficient data structures that minimize storage overhead while maintaining quick access

- Intelligent buffer management policies that handle overflow scenarios gracefully

- Persistent storage mechanisms that survive device reboots and power failures

- Data aging and prioritization strategies to preserve the most critical information
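For the persistence requirement, an embedded database is often simpler than a hand-rolled file format. The sketch below uses Python's built-in `sqlite3` as an assumed storage choice; `DiskQueue` and the `outbox` table are hypothetical names. Rows are deleted only when the sender acknowledges them, so committed data survives a process restart or reboot, assuming the storage device honors `fsync`.

```python
import sqlite3
from typing import List, Tuple


class DiskQueue:
    """Hypothetical persistent FIFO buffer backed by a SQLite file."""

    def __init__(self, path: str) -> None:
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS outbox ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " payload BLOB NOT NULL)"
        )
        self._db.commit()

    def put(self, payload: bytes) -> None:
        with self._db:  # commits on success, rolls back on error
            self._db.execute("INSERT INTO outbox (payload) VALUES (?)", (payload,))

    def peek(self, limit: int = 100) -> List[Tuple[int, bytes]]:
        # Oldest first; rows stay on disk until ack() removes them.
        return self._db.execute(
            "SELECT id, payload FROM outbox ORDER BY id LIMIT ?", (limit,)
        ).fetchall()

    def ack(self, ids: List[int]) -> None:
        # Delete only what the cloud side has confirmed.
        with self._db:
            self._db.executemany("DELETE FROM outbox WHERE id = ?", [(i,) for i in ids])

    def __len__(self) -> int:
        return self._db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]

    def close(self) -> None:
        self._db.close()
```

The peek-then-ack split gives at-least-once delivery: if the device reboots between sending and acknowledging, the same rows are sent again after restart.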

Implementing circular buffers with overflow protection ensures that systems continue to capture new data even when storage approaches capacity. Critical events can be tagged with higher priority to prevent their deletion during buffer management operations.
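A minimal sketch of such a buffer, assuming a single `critical` flag per reading rather than graded priorities; `PriorityRingBuffer` is a hypothetical name. When the buffer is full it evicts the oldest routine reading, and it drops the oldest critical reading only if nothing else is left, which is one possible policy among several.

```python
from collections import deque
from typing import Any, Deque, List, Tuple


class PriorityRingBuffer:
    """Hypothetical bounded buffer that always accepts new data but protects critical items."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: Deque[Tuple[bool, Any]] = deque()  # (critical, reading), oldest first
        self.evicted = 0

    def append(self, reading: Any, critical: bool = False) -> None:
        if len(self._items) >= self.capacity:
            self._evict_one()
        self._items.append((critical, reading))

    def _evict_one(self) -> None:
        for index, (critical, _) in enumerate(self._items):
            if not critical:
                del self._items[index]  # the oldest routine reading goes first
                break
        else:
            self._items.popleft()       # everything is critical: drop the oldest anyway
        self.evicted += 1

    def snapshot(self) -> List[Any]:
        return [reading for _, reading in self._items]
```

The eviction scan is linear in the buffer size, which is usually acceptable for small edge buffers; counting evictions gives the monitoring system a direct signal that outages are outlasting the buffer.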

Retry Logic and Exponential Backoff

Transient failures are inevitable in distributed systems. Implementing intelligent retry mechanisms prevents temporary issues from causing permanent data loss. Exponential backoff strategies help prevent overwhelming recovering systems while ensuring persistent reconnection attempts.

The retry logic should include configurable maximum attempt counts, timeout values, and backoff multipliers. Different types of failures may warrant different retry strategies: a network timeout can be retried promptly with a short initial delay that then grows, while authentication failures should use much longer intervals, or stop and alert an operator, to avoid account lockouts.
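The sketch below shows capped exponential backoff with "full jitter" (a random delay between zero and the current backoff), a common refinement that keeps many devices from reconnecting in lockstep. The parameter defaults, the `retryable` exception tuple, and the `PermanentError` class are assumptions for illustration; in this version anything outside `retryable`, such as rejected credentials, is raised immediately instead of being retried.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class PermanentError(Exception):
    """Hypothetical marker for failures that retrying will not fix (e.g. rejected credentials)."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    multiplier: float = 2.0,
    max_delay: float = 30.0,
    retryable: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with capped exponential backoff.

    The backoff ceiling before attempt n+1 is min(max_delay, base_delay * multiplier**(n-1));
    the actual sleep is drawn uniformly from [0, ceiling].
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retryable:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last transient error
            ceiling = min(max_delay, base_delay * multiplier ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("unreachable")
```

The `sleep` parameter is injectable so tests and simulations can record delays instead of waiting for them.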

Data Validation and Quality Assurance 🔍

Resilience isn’t just about preventing data loss; it’s also about ensuring data quality. Implementing validation checks at multiple pipeline stages helps identify and handle corrupted or anomalous data before it contaminates downstream analytics.

Validation strategies include schema verification, range checking, timestamp validation, and anomaly detection. When invalid data is detected, the system should log the issue, quarantine the problematic data, and continue processing valid messages.
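A minimal sketch of that validate, log, quarantine, and continue flow is shown below. The field names, the -40 to 85 °C range, and the five-minute clock-skew allowance are illustrative assumptions; real limits come from the sensor's datasheet and the deployment's clock guarantees.

```python
import logging
from typing import Any, Dict, List, Optional, Tuple

log = logging.getLogger("pipeline.validation")

# Illustrative schema and limits (assumptions, not from any specific sensor).
REQUIRED_FIELDS = {"sensor_id": (str,), "ts": (int, float), "temp_c": (int, float)}
TEMP_RANGE_C = (-40.0, 85.0)
MAX_CLOCK_SKEW_S = 300.0


def validate(reading: Dict[str, Any], now: float) -> Optional[str]:
    """Return None if the reading is valid, otherwise a short reason string."""
    for name, types in REQUIRED_FIELDS.items():  # schema check
        if name not in reading:
            return f"missing field {name}"
        if isinstance(reading[name], bool) or not isinstance(reading[name], types):
            return f"bad type for {name}"
    low, high = TEMP_RANGE_C
    if not low <= reading["temp_c"] <= high:  # range check (NaN also fails here)
        return "temp_c out of range"
    if abs(reading["ts"] - now) > MAX_CLOCK_SKEW_S:  # timestamp check
        return "timestamp outside allowed skew"
    return None


def partition(readings: List[Dict[str, Any]], now: float) -> Tuple[List[Dict], List[Dict]]:
    """Split readings into valid ones and quarantined ones, without stopping on bad data."""
    valid: List[Dict] = []
    quarantined: List[Dict] = []
    for reading in readings:
        reason = validate(reading, now)
        if reason is None:
            valid.append(reading)
        else:
            log.warning("quarantined reading: %s", reason)
            quarantined.append({"reading": reading, "reason": reason})
    return valid, quarantined
```

Keeping the rejected reading together with its reason makes it possible to inspect or replay quarantined data later, for example after a sensor is recalibrated.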

Architectural Patterns for Maximum Resilience

Choosing the right architectural patterns significantly impacts pipeline resilience. Several proven approaches can enhance system reliability and fault tolerance.

The Lambda Architecture Approach

Lambda architecture combines batch and stream processing to provide both real-time insights and historical accuracy. In this pattern, data flows through both a speed layer for immediate processing and a batch layer for comprehensive analysis. If the speed layer experiences issues, the batch layer ensures that data is eventually processed correctly.

This approach provides excellent resilience by maintaining multiple processing paths. However, it introduces complexity in maintaining two separate processing pipelines and reconciling their outputs.

Event Sourcing and Event Streaming

Event sourcing treats all state changes as a sequence of events stored in an append-only log. This pattern naturally provides resilience by maintaining a complete history of all data received. Systems can be rebuilt from the event log if failures occur, and multiple consumers can process events independently.

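Modern streaming platforms like Apache Kafka and Amazon Kinesis implement this pattern effectively, providing durable storage and replay capabilities. Kafka can additionally provide exactly-once semantics within Kafka through idempotent producers and transactions, while Kinesis Data Streams delivers records at least once, so consumers should be written to tolerate duplicates.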
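The replay and duplicate-tolerance ideas can be illustrated with an in-memory stand-in for a log partition. `EventLog`, `LatestValueView`, and the producer-assigned `event_id` are hypothetical; this sketches the pattern and is not the Kafka or Kinesis client API.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Event:
    event_id: str   # unique id assigned by the producer (assumption)
    sensor_id: str
    value: float


class EventLog:
    """In-memory stand-in for one append-only log partition."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, event: Event) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read_from(self, offset: int) -> List[Event]:
        return self._events[offset:]


class LatestValueView:
    """Consumer that derives 'latest value per sensor' state purely from the log."""

    def __init__(self) -> None:
        self.latest: Dict[str, float] = {}
        self._seen: Set[str] = set()
        self.offset = 0  # next offset to read: the consumer's checkpoint

    def catch_up(self, log: EventLog) -> None:
        for event in log.read_from(self.offset):
            # Skipping already-seen ids makes redelivered events harmless.
            if event.event_id not in self._seen:
                self._seen.add(event.event_id)
                self.latest[event.sensor_id] = event.value
            self.offset += 1
```

Because the view holds no state that is not derivable from the log, a crashed consumer can be rebuilt by starting a fresh instance at offset 0. The `_seen` set grows without bound here; real consumers usually limit deduplication to a time or offset window.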

Circuit Breaker Pattern

The circuit breaker pattern prevents cascading failures by detecting when downstream services are unhealthy and temporarily stopping requests to them. This allows failing services time to recover without being overwhelmed by continued traffic.

Implementing circuit breakers at gateway and ingestion layers protects the entire pipeline from localized failures. The pattern includes three states: closed (normal operation), open (blocking requests), and half-open (testing recovery), with configurable thresholds for state transitions.
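A minimal, single-threaded sketch of the three-state breaker follows. The failure threshold and reset timeout are illustrative defaults, and `CircuitBreaker` and `CircuitOpenError` are hypothetical names rather than a specific library's API.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the downstream service while the breaker is open."""


class CircuitBreaker:
    """Minimal closed / open / half-open breaker (not thread-safe)."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self.state = "closed"
        self._failures = 0
        self._opened_at = 0.0

    def call(self, operation: Callable[[], T]) -> T:
        if self.state == "open":
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("downstream marked unhealthy; request blocked")
            self.state = "half-open"  # allow a trial request to test recovery
        try:
            result = operation()
        except Exception:
            self._record_failure()
            raise
        self._failures = 0            # any success closes the circuit again
        self.state = "closed"
        return result

    def _record_failure(self) -> None:
        self._failures += 1
        # A failed trial in half-open reopens immediately; otherwise wait for the threshold.
        if self.state == "half-open" or self._failures >= self.failure_threshold:
            self.state = "open"
            self._opened_at = self._clock()
```

Callers that receive `CircuitOpenError` would typically keep the data in their local buffer rather than drop it, so the breaker protects the downstream service without costing data.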

🛠️ Technology Stack Considerations

Selecting the right technologies is crucial for building a resilient logging pipeline. The choices should balance functionality, reliability, scalability, and operational complexity.

Message Brokers and Streaming Platforms

Message brokers serve as the backbone of resilient data pipelines. Apache Kafka has become the de facto standard for high-throughput, distributed streaming, offering excellent durability, scalability, and replay capabilities. RabbitMQ provides robust message queuing with flexible routing options, while cloud-native solutions like Amazon Kinesis and Azure Event Hubs offer managed services with built-in resilience.

When selecting a message broker, consider factors such as message ordering guarantees, persistence mechanisms, scalability characteristics, and operational complexity. The choice significantly impacts your pipeline’s resilience profile and operational overhead.

Time-Series Databases

Sensor data typically exhibits time-series characteristics, making specialized databases like InfluxDB, TimescaleDB, and Amazon Timestream excellent choices for storage. These systems optimize for time-based queries, provide efficient compression, and support retention policies that automatically manage data lifecycle.

Resilience features to look for include replication, automated backups, high availability configurations, and disaster recovery capabilities. Many modern time-series databases offer clustering and sharding to distribute load and provide failover protection.

Monitoring and Observability Tools

You cannot improve what you cannot measure. Comprehensive monitoring is essential for maintaining pipeline resilience. Tools like Prometheus, Grafana, the ELK stack (Elasticsearch, Logstash, Kibana), and Datadog provide visibility into system health, performance metrics, and failure patterns.

Key metrics to monitor include message throughput, latency, error rates, buffer utilization, connection status, and data quality indicators. Alerting mechanisms should notify operators of anomalies before they escalate into critical failures.
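The kind of threshold check an alerting rule performs can be sketched in a few lines. The `PipelineWindow` fields and the threshold values are hypothetical; in practice this logic usually lives in the alerting rules of a monitoring tool such as Prometheus rather than in application code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PipelineWindow:
    """Counters collected over one monitoring window (field names are hypothetical)."""
    window_s: float
    messages_in: int
    messages_failed: int
    buffer_used: int
    buffer_capacity: int
    p95_latency_ms: float


# Illustrative thresholds; tune them to your own service-level objectives.
MAX_ERROR_RATE = 0.01
MAX_BUFFER_UTILIZATION = 0.80
MAX_P95_LATENCY_MS = 2_000.0


def check(window: PipelineWindow) -> List[str]:
    """Return a human-readable alert for each metric that crossed its threshold."""
    alerts: List[str] = []
    if window.messages_in == 0:
        alerts.append("no messages received: check sensor connectivity")
    error_rate = window.messages_failed / window.messages_in if window.messages_in else 0.0
    if error_rate > MAX_ERROR_RATE:
        alerts.append(f"error rate {error_rate:.1%} exceeds {MAX_ERROR_RATE:.0%}")
    utilization = window.buffer_used / window.buffer_capacity
    if utilization > MAX_BUFFER_UTILIZATION:
        alerts.append(f"buffer {utilization:.0%} full")
    if window.p95_latency_ms > MAX_P95_LATENCY_MS:
        alerts.append(f"p95 latency {window.p95_latency_ms:.0f} ms")
    return alerts
```

Alerting on buffer utilization is what gives operators warning before data loss: a buffer that is filling up signals trouble while nothing has been dropped yet.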

Operational Excellence: Keeping Your Pipeline Healthy 💪

Building a resilient pipeline is only half the battle; maintaining it requires ongoing operational diligence and continuous improvement practices.

Automated Testing and Chaos Engineering

Regular testing validates that resilience mechanisms function as designed. Automated test suites should simulate various failure scenarios including network interruptions, service outages, high load conditions, and data corruption events.

Chaos engineering takes this further by intentionally introducing failures into production systems to validate resilience under real-world conditions. Tools like Chaos Monkey and Gremlin help identify weaknesses before they cause actual incidents.

Capacity Planning and Scalability

Resilient systems must accommodate growth without performance degradation. Regular capacity planning reviews ensure that buffers, network bandwidth, processing resources, and storage systems can handle projected data volumes with appropriate headroom.
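Two back-of-the-envelope calculations cover much of buffer and bandwidth planning: how much storage is needed to ride out an outage, and how long the backlog takes to drain once the link returns. The 1.5x headroom factor and the example figures are assumptions for illustration.

```python
import math


def required_buffer_bytes(sensors: int, readings_per_s: float, bytes_per_reading: int,
                          outage_s: float, headroom: float = 1.5) -> int:
    """Storage needed to survive an outage of `outage_s` seconds without dropping data.

    bytes = sensors * readings_per_s * bytes_per_reading * outage_s * headroom
    The headroom factor (assumed 1.5x) covers bursts, metadata and storage overhead.
    """
    return math.ceil(sensors * readings_per_s * bytes_per_reading * outage_s * headroom)


def backlog_drain_seconds(backlog_bytes: float, uplink_bytes_per_s: float,
                          generation_bytes_per_s: float) -> float:
    """Time to empty a backlog while new data keeps arriving."""
    spare = uplink_bytes_per_s - generation_bytes_per_s
    if spare <= 0:
        return math.inf  # the backlog never shrinks: the uplink is undersized
    return backlog_bytes / spare


# 50 sensors at 1 Hz, 200-byte readings, a 24-hour outage:
# 50 * 1 * 200 * 86,400 * 1.5 = 1,296,000,000 bytes (about 1.3 GB).
buffer_needed = required_buffer_bytes(50, 1.0, 200, 24 * 3600)

# Draining the raw 864 MB backlog over a 50 kB/s uplink while 10 kB/s of new data
# arrives takes 864,000,000 / 40,000 = 21,600 s, i.e. six hours.
drain_time = backlog_drain_seconds(864_000_000, 50_000, 10_000)
```

The drain calculation is easy to overlook: an uplink that barely exceeds the generation rate can take far longer to recover from an outage than the outage itself lasted.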

Implementing auto-scaling for cloud components allows systems to dynamically adapt to changing loads. However, scaling policies should be carefully tuned to avoid over-provisioning costs while maintaining sufficient capacity for peak loads.

Documentation and Runbooks

Comprehensive documentation accelerates incident response and knowledge transfer. Runbooks should detail common failure scenarios, diagnostic procedures, and remediation steps. Including architecture diagrams, data flow illustrations, and configuration details helps operators quickly understand system behavior.

Regular runbook reviews and tabletop exercises ensure that teams remain prepared to handle incidents effectively, minimizing downtime and data loss when problems occur.

Security Considerations in Resilient Pipelines 🔐

Resilience and security are complementary concerns. A truly robust pipeline must protect data integrity and confidentiality while maintaining availability.

Encrypting data in transit (for example with TLS) and at rest protects its confidentiality throughout the pipeline. Certificate-based authentication ensures that only authorized devices can send data to your pipeline. Regular security audits and vulnerability assessments identify potential weaknesses before they can be exploited.

Rate limiting and DDoS protection mechanisms prevent malicious actors from overwhelming your ingestion endpoints. These security controls enhance resilience by protecting against both accidental and intentional overload conditions.
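Per-device rate limiting is commonly implemented with a token bucket, sketched below. The rate and burst values are assumptions, and `TokenBucket` and `PerDeviceLimiter` are hypothetical names. This addresses application-level overload from individual devices; volumetric DDoS attacks generally also need protection at the network edge.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Token bucket: refills at `rate` tokens per second, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = capacity  # start full so a fresh device can burst
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


class PerDeviceLimiter:
    """One bucket per device id, so a single misbehaving device cannot starve the rest."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate = rate
        self._capacity = capacity
        self._clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, device_id: str) -> bool:
        bucket = self._buckets.get(device_id)
        if bucket is None:
            bucket = TokenBucket(self._rate, self._capacity, self._clock)
            self._buckets[device_id] = bucket
        return bucket.allow()
```

The bucket capacity sets how large a burst is tolerated, for example a gateway flushing its buffer after reconnecting, while the rate caps sustained throughput.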

Real-World Success Stories and Lessons Learned

Organizations across industries have successfully implemented resilient sensor-to-cloud pipelines, each learning valuable lessons along the way. Manufacturing facilities use these systems for predictive maintenance, with some deployments reporting reductions in unplanned downtime of up to 50%. Smart cities deploy sensor networks that continue functioning despite infrastructure challenges, providing continuous monitoring of air quality, traffic patterns, and utility consumption.

Common lessons from successful implementations include starting with a minimum viable pipeline and iteratively adding resilience features, over-provisioning buffering capacity initially, implementing comprehensive monitoring from day one, and regularly testing failover mechanisms before they’re needed in production.

🎯 The Path Forward: Building Your Resilient Pipeline

Creating a robust sensor-to-cloud logging pipeline requires balancing multiple considerations: technical capabilities, operational requirements, budget constraints, and organizational expertise. The journey begins with clearly defining your resilience requirements based on business impact, data criticality, and acceptable loss thresholds.

Start by implementing foundational resilience features like local buffering, retry logic, and basic monitoring. Gradually layer on more sophisticated capabilities such as advanced routing, complex event processing, and machine learning-based anomaly detection as your system matures.

Remember that resilience is not a one-time achievement but an ongoing commitment. Systems evolve, requirements change, and new failure modes emerge. Regular reviews, continuous testing, and iterative improvements ensure that your pipeline remains robust as your needs grow.

The investment in building a resilient logging pipeline pays dividends through reduced downtime, improved data quality, lower operational stress, and increased confidence in your data-driven insights. As sensors continue proliferating and data volumes grow, organizations with robust collection infrastructure will maintain their competitive advantage in an increasingly connected world.

By following the principles and practices outlined in this article, you can build a logging pipeline that not only meets today’s requirements but adapts gracefully to tomorrow’s challenges, ensuring seamless data collection regardless of the obstacles encountered along the way.

Toni Santos is a meteorological researcher and atmospheric data specialist focusing on the study of airflow dynamics, citizen-based weather observation, and the computational models that decode cloud behavior. Through an interdisciplinary and sensor-focused lens, Toni investigates how humanity has captured wind patterns, atmospheric moisture, and climate signals across landscapes, technologies, and distributed networks. His work is grounded in a fascination with the atmosphere not only as a phenomenon, but as a carrier of environmental information. From airflow pattern capture systems to cloud modeling and distributed sensor networks, Toni uncovers the observational and analytical tools through which communities preserve their relationship with the atmospheric unknown.

With a background in weather instrumentation and atmospheric data history, Toni blends sensor analysis with field research to reveal how weather data is used to shape prediction, transmit climate patterns, and encode environmental knowledge.

As the creative mind behind dralvynas, Toni curates illustrated atmospheric datasets, speculative airflow studies, and interpretive cloud models that revive the deep methodological ties between weather observation, citizen technology, and data-driven science. His work is a tribute to:

- The evolving methods of Airflow Pattern Capture Technology

- The distributed power of Citizen Weather Technology and Networks

- The predictive modeling of Cloud Interpretation Systems

- The interconnected infrastructure of Data Logging Networks and Sensors

Whether you're a weather historian, atmospheric researcher, or curious observer of environmental data wisdom, Toni invites you to explore the hidden layers of climate knowledge: one sensor, one airflow, one cloud pattern at a time.