Edge AI is changing how businesses process data by enabling real-time analysis at the source, which in turn reshapes how cloud platforms interpret data and support decision-making across industries.

🚀 The Convergence of Edge Computing and Artificial Intelligence

The digital transformation landscape is experiencing a paradigm shift as organizations recognize the limitations of traditional cloud-only architectures. Edge AI represents the marriage of artificial intelligence with edge computing, bringing computational power closer to data sources. This technological convergence addresses critical challenges including latency, bandwidth constraints, and privacy concerns that have hindered real-time applications.

Traditional cloud computing models require data to travel from sensors and devices to centralized data centers for processing. This journey introduces delays that can range from milliseconds to seconds, an eternity for applications requiring instantaneous responses. Edge AI avoids this round trip for time-critical decisions by processing data locally, at the network’s edge, before transmitting only relevant insights to the cloud for deeper analysis and long-term storage.

The synergy between edge and cloud creates a hybrid intelligence architecture that leverages the strengths of both environments. While edge devices handle time-sensitive processing, cloud infrastructure provides the computational muscle for complex machine learning model training, historical data analysis, and coordinated insights across multiple edge locations.

Understanding the Edge AI Architecture

Edge AI systems consist of multiple layers working in concert to deliver real-time intelligence. At the foundation lie edge devices—sensors, cameras, IoT gadgets, and specialized hardware equipped with processing capabilities. These devices run lightweight AI models optimized for resource-constrained environments, performing inference tasks with minimal power consumption.

The middleware layer facilitates communication between edge devices and cloud infrastructure, managing data flow, security protocols, and model updates. This layer ensures seamless synchronization while implementing intelligent filtering to reduce unnecessary data transmission. Only actionable insights, anomalies, or aggregated summaries typically reach cloud servers, dramatically reducing bandwidth requirements.
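As a rough illustration of the filtering described above, the sketch below reduces a window of raw readings to a single summary and forwards individual values only when they fall outside an expected band. The function name `summarize_window`, the field names, and the `low`/`high` limits are assumptions made for this example, not part of any particular middleware product.

```python
from statistics import mean

def summarize_window(readings, low, high):
    """Reduce a window of raw readings to one summary plus out-of-band values.

    `low` and `high` are hypothetical limits for normal operation.
    """
    if not readings:
        return {"count": 0, "anomalies": []}
    anomalies = [r for r in readings if r < low or r > high]
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        "anomalies": anomalies,  # the only raw values that leave the device
    }
```

A device sampling many times per second could call this once per window and send the resulting dictionary upstream instead of every reading.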

Cloud infrastructure serves as the brain of the operation, hosting sophisticated machine learning pipelines, data lakes, and analytics platforms. Here, data scientists refine models based on aggregated edge data, deploy updates across device fleets, and generate strategic insights that inform business decisions. This continuous feedback loop between edge and cloud enables systems to improve over time.

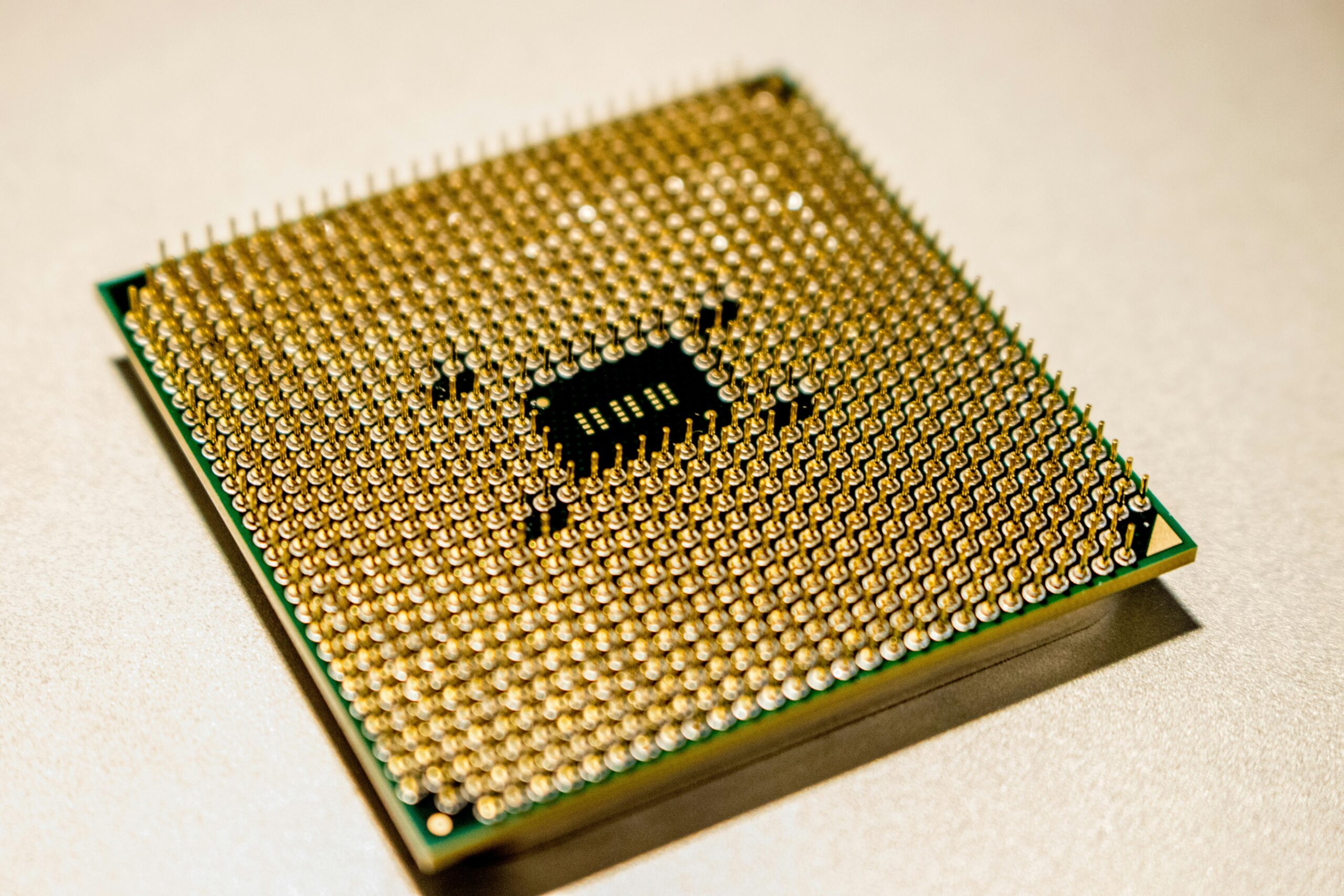

Hardware Acceleration at the Edge

Modern edge AI relies heavily on specialized hardware designed for efficient neural network execution. Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), and Neural Processing Units (NPUs) enable complex computations within tight power budgets. Companies like NVIDIA, Intel, and ARM have developed dedicated edge AI chipsets that balance performance with energy efficiency.

Model optimization techniques such as quantization, pruning, and knowledge distillation compress large neural networks into compact models suitable for these accelerators and other resource-limited devices. An AI model that might require gigabytes of memory in the cloud can often be optimized to run on edge hardware with mere megabytes of capacity, while maintaining acceptable accuracy levels.
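To make quantization concrete, here is a minimal sketch of affine 8-bit quantization in plain Python. It is a simplified illustration under stated assumptions (one scale for the whole list, no calibration data), not how any specific toolchain implements it; the function names are hypothetical.

```python
def quantize_int8(values):
    """Affine-quantize a list of floats to signed 8-bit integer codes.

    Returns (codes, scale, zero_point). Illustrative only: production
    toolchains add calibration, per-channel scales, and other refinements.
    """
    lo, hi = min(values), max(values)
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # make sure 0.0 lies inside the range
    scale = (hi - lo) / 255.0 or 1.0      # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point

def dequantize(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(c - zero_point) * scale for c in codes]
```

Storing each weight as one byte instead of a four-byte 32-bit float cuts weight storage to roughly a quarter, at the cost of a rounding error of about half a quantization step per value.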

💡 Real-Time Insights Transforming Industry Verticals

The manufacturing sector has emerged as an early adopter of edge AI technology, deploying computer vision systems for quality control and predictive maintenance. Cameras equipped with AI processors inspect products at production line speeds, identifying defects that human inspectors might miss. These systems make accept-or-reject decisions within milliseconds, preventing defective items from progressing through manufacturing workflows.

Predictive maintenance applications analyze vibration patterns, temperature fluctuations, and acoustic signatures from industrial equipment to forecast failures before they occur. By processing sensor data locally, edge AI systems detect anomalies immediately and trigger alerts, preventing costly downtime. Cloud platforms aggregate these insights across facilities, identifying systemic issues and optimizing maintenance schedules enterprise-wide.
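One simple way to detect such anomalies on the device is a rolling z-score over recent readings. The sketch below assumes a single scalar signal (for example, a vibration amplitude); the class name, window size, and threshold are illustrative choices, and real deployments often use richer models.

```python
from collections import deque
from statistics import mean, pstdev

class RollingAnomalyDetector:
    """Flag readings that deviate strongly from a rolling baseline."""

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold        # z-score limit, an assumed tuning value
        self.min_samples = min_samples

    def update(self, value):
        """Return True if `value` is anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                is_anomaly = abs(value - mu) / sigma > self.threshold
        if not is_anomaly:
            self.history.append(value)    # keep outliers out of the baseline
        return is_anomaly
```

Each call to `update` costs a pass over a small window, which keeps the check cheap enough for a microcontroller-class device.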

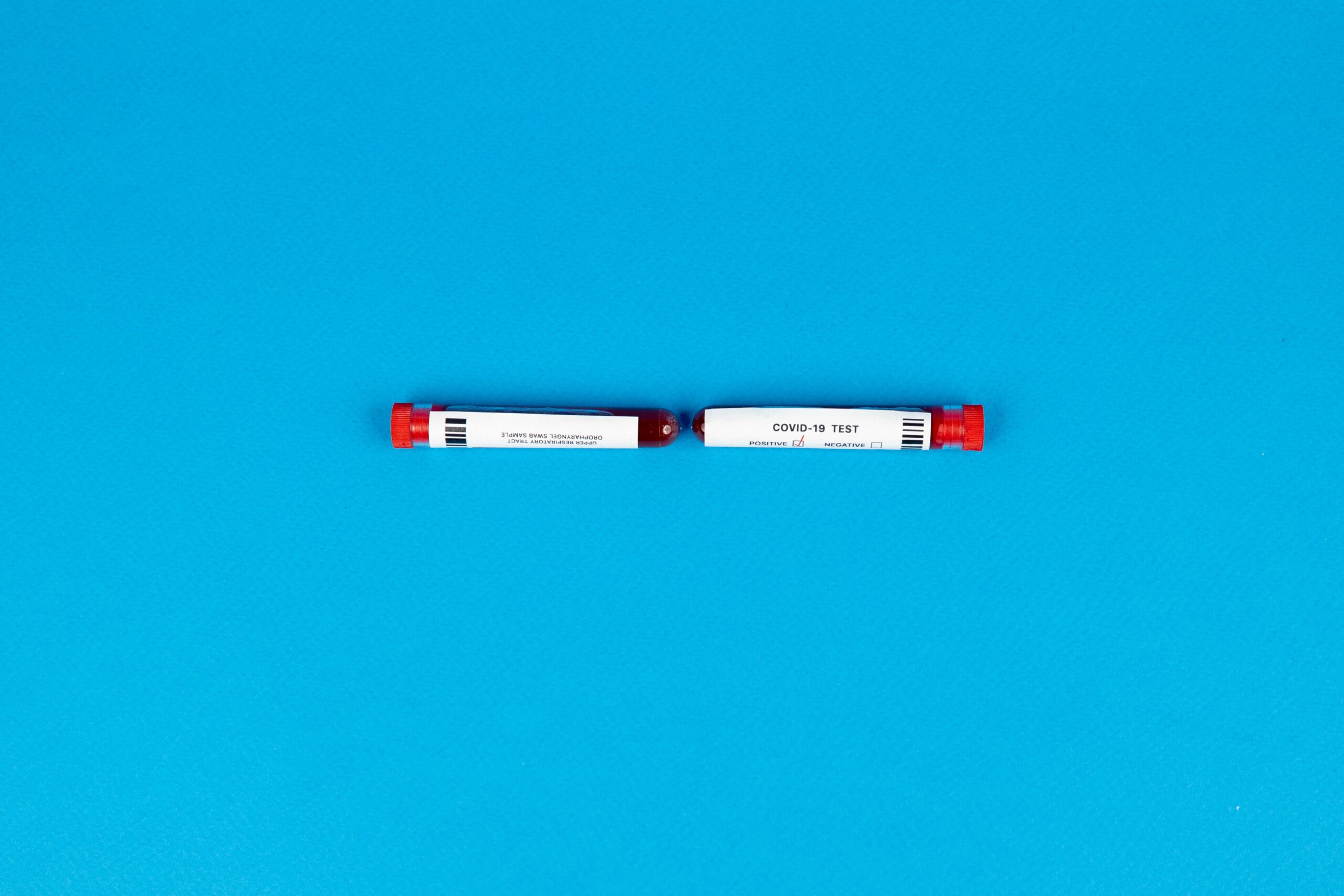

Healthcare Revolution Through Distributed Intelligence

Medical applications demand both real-time responsiveness and stringent privacy protections—requirements perfectly suited to edge AI architectures. Wearable health monitors analyze vital signs continuously, detecting cardiac arrhythmias, falls, or concerning trends without transmitting sensitive patient data to external servers. Only when anomalies are detected do these devices communicate alerts to healthcare providers.

Hospitals deploy edge AI for patient monitoring systems that track dozens of parameters simultaneously. These systems reduce alarm fatigue by filtering false positives locally and escalating only genuine concerns. Surgical robotics benefit from edge processing that provides the sub-millisecond latency required for precise instrument control, while cloud platforms support surgical planning and outcome analysis.
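A very simple form of local false-positive filtering is to escalate only when a limit is breached for several consecutive samples. The sketch below illustrates that idea; the class name, the limit, and the required streak length are hypothetical, and real clinical alarm logic is validated far more rigorously.

```python
class PersistentAlarm:
    """Escalate only when a limit is breached for several consecutive samples."""

    def __init__(self, limit, required_consecutive=3):
        self.limit = limit
        self.required = required_consecutive
        self.streak = 0

    def update(self, value):
        """Return True once the breach has persisted long enough to escalate."""
        self.streak = self.streak + 1 if value > self.limit else 0
        return self.streak >= self.required
```

A single spike, such as one caused by patient movement, resets on the next normal sample and never reaches the escalation threshold.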

Autonomous Systems and Smart Transportation

Self-driving vehicles represent perhaps the most demanding edge AI application, requiring split-second decisions based on sensor fusion from cameras, lidar, radar, and GPS. Processing terabytes of sensor data in the cloud would introduce unacceptable latency, making edge computing essential for autonomous navigation. Vehicles make immediate decisions about steering, acceleration, and braking while uploading driving experiences to cloud platforms for fleet learning.

Smart city infrastructure leverages edge AI for traffic management, optimizing signal timing based on real-time vehicle and pedestrian flow. Intelligent cameras identify congestion, accidents, and safety violations, coordinating responses across intersections. Cloud analytics identify broader traffic patterns, informing urban planning and infrastructure investments.

Overcoming Technical Challenges in Edge AI Deployment

Implementing edge AI at scale presents unique challenges that organizations must address. Model optimization remains a critical concern, as state-of-the-art AI models developed in cloud environments often prove too resource-intensive for edge deployment. Data scientists must balance accuracy against computational efficiency, sometimes accepting slightly reduced performance for dramatic improvements in speed and power consumption.

Edge device management across distributed deployments introduces operational complexity. Organizations need robust systems for remote monitoring, troubleshooting, and updates. Over-the-air update mechanisms must deliver new AI models and security patches without disrupting operations, while rollback capabilities ensure problematic updates can be quickly reversed.
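The update-then-rollback pattern can be sketched as a small state machine that remembers the previously active model. Everything here is illustrative: `ModelSlotManager` and the `health_check` callback are assumed names, and a real over-the-air system would also verify signatures and persist state across reboots.

```python
class ModelSlotManager:
    """Keep the active model and the previous one so an update can be undone."""

    def __init__(self, initial_version):
        self.active = initial_version
        self.previous = None

    def apply_update(self, new_version, health_check):
        """Activate `new_version`; roll back if `health_check` rejects it.

        `health_check` is a caller-supplied function (hypothetical) that takes
        a version and returns True when the device behaves correctly on it.
        """
        self.previous, self.active = self.active, new_version
        if health_check(new_version):
            return True
        self.active, self.previous = self.previous, None  # roll back
        return False
```

The device keeps serving the last known-good version whenever a new model fails its post-install check.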

Security and Privacy Considerations

Edge AI offers inherent privacy advantages by processing sensitive data locally rather than transmitting it to centralized servers. However, distributed deployments expand the attack surface, creating numerous potential entry points for malicious actors. Each edge device requires robust security measures including encrypted storage, secure boot processes, and tamper detection.

Data minimization principles guide edge AI architectures, ensuring only necessary information leaves devices. Differential privacy techniques add calibrated noise to transmitted aggregates, providing a mathematical bound on how much anyone can infer about an individual data point. Federated learning approaches enable model improvement across device fleets without centralizing training data, preserving privacy while maintaining AI performance.
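The two ideas in this paragraph can be sketched in a few lines. The first function shows the weighted averaging step at the heart of federated averaging; the second releases a sum with Laplace noise, a standard differential privacy mechanism. Both are simplified illustrations with assumed function names, and they omit secure aggregation, clipping, and privacy budget accounting.

```python
import random

def federated_average(updates):
    """Combine per-device weight vectors, weighted by local sample counts.

    `updates` is a list of (weights, num_samples) pairs. Only model
    parameters appear here; raw training data stays on each device.
    """
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]

def laplace_noisy_sum(values, sensitivity, epsilon, rng=random):
    """Release a sum with Laplace noise of scale sensitivity / epsilon.

    Assumes each individual changes the sum by at most `sensitivity`.
    """
    scale = sensitivity / epsilon
    # The difference of two exponential draws is Laplace-distributed.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return sum(values) + noise
```

A smaller `epsilon` means more noise and stronger privacy, so the value is a policy decision rather than a purely technical one.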

🔧 The Technology Stack Powering Edge Intelligence

Software frameworks specifically designed for edge AI have emerged to simplify development and deployment. TensorFlow Lite, PyTorch Mobile, and ONNX Runtime provide optimized inference engines that run efficiently on mobile and embedded processors. Their tooling converts models from training formats to edge-compatible versions and can apply quantization and other optimizations during conversion, although the results should still be validated for accuracy on the target device.

Container and orchestration technologies like Docker and Kubernetes have been adapted for edge environments, enabling consistent deployment across heterogeneous hardware. Edge-specific orchestration platforms manage application lifecycles across thousands of distributed nodes, handling updates, scaling, and failover automatically. These platforms integrate with cloud-native tools, creating unified management interfaces spanning edge and cloud infrastructure.

Connectivity and Data Synchronization

Edge AI systems must function reliably despite intermittent connectivity, processing data locally when network access is unavailable. Intelligent buffering and synchronization protocols protect against data loss during outages and throttle the backlog so that it does not saturate the link when connectivity returns. Time-series databases optimized for edge environments efficiently store and compress local data before cloud transmission.
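A minimal store-and-forward sketch shows the buffering idea: records queue up while the link is down and are released in bounded batches once sending succeeds. The class name, the `send` callback, and the drop-oldest overflow policy are assumptions for illustration; a deployment that cannot tolerate any loss would spill to disk instead of dropping.

```python
from collections import deque

class StoreAndForwardBuffer:
    """Hold records while offline and release them in bounded batches."""

    def __init__(self, capacity=10_000, batch_size=100):
        # Assumed policy: when the buffer is full, the oldest record is dropped.
        self.queue = deque(maxlen=capacity)
        self.batch_size = batch_size

    def add(self, record):
        self.queue.append(record)

    def flush(self, send, max_batches=1):
        """Send up to `max_batches` batches; stop at the first failure.

        `send` is a caller-supplied function that returns True on success.
        Returns the number of records delivered.
        """
        sent = 0
        for _ in range(max_batches):
            if not self.queue:
                break
            size = min(self.batch_size, len(self.queue))
            batch = [self.queue[i] for i in range(size)]
            if not send(batch):
                break                     # still offline: keep records queued
            for _ in batch:
                self.queue.popleft()      # remove only after confirmed delivery
            sent += len(batch)
        return sent
```

Limiting `max_batches` per call is what keeps a long backlog from flooding the connection the moment it comes back.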

5G networks are dramatically improving edge AI capabilities by providing high-bandwidth, low-latency connectivity that blurs the line between edge and cloud. Multi-access edge computing (MEC) architectures place computational resources within cellular networks themselves, enabling ultra-low-latency processing for mobile applications. This network-edge hybrid approach combines the benefits of local processing with the flexibility of cloud-like infrastructure.

Measuring Success: KPIs for Edge AI Implementations

Organizations must establish clear metrics to evaluate edge AI performance and return on investment. Latency reduction represents a primary benefit, measured by comparing response times before and after edge AI deployment. Applications requiring real-time decisions should demonstrate response times measured in milliseconds rather than seconds.
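A before-and-after latency comparison is usually reported as percentiles rather than averages, since tail latency matters most for real-time decisions. The sketch below uses the nearest-rank method; the function names and the choice of p50 and p95 are assumptions for this example.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of latency samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def latency_report(before_ms, after_ms):
    """Compare median and tail latency before and after an edge rollout."""
    return {
        "p50_before": percentile(before_ms, 50),
        "p50_after": percentile(after_ms, 50),
        "p95_before": percentile(before_ms, 95),
        "p95_after": percentile(after_ms, 95),
    }
```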

Bandwidth savings provide tangible cost benefits, particularly for organizations with numerous remote locations or expensive connectivity. By processing data locally and transmitting only insights, edge AI can cut bandwidth consumption sharply compared to cloud-only approaches; reductions of 90% or more are plausible when raw video or high-frequency sensor streams are replaced by event messages and summaries, though the actual figure depends on the workload. These savings translate directly to reduced data transfer costs and improved network reliability.
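The arithmetic behind a bandwidth estimate is straightforward. The worked example below uses made-up figures for a single camera (a 2 Mbit/s stream versus 5,000 small event messages per day); the numbers are purely illustrative and should be replaced with measured values.

```python
def bandwidth_saving(raw_bytes_per_day, transmitted_bytes_per_day):
    """Fraction of upstream traffic avoided by processing at the edge."""
    if raw_bytes_per_day <= 0:
        raise ValueError("raw_bytes_per_day must be positive")
    return 1 - transmitted_bytes_per_day / raw_bytes_per_day

# Hypothetical camera: 2 Mbit/s continuous video versus 5,000 event
# messages per day of 2,000 bytes each.
raw = 2_000_000 / 8 * 86_400      # bytes per day of raw video (21.6 GB)
sent = 5_000 * 2_000              # bytes per day of event messages (10 MB)
saving = bandwidth_saving(raw, sent)
```

Under these assumptions the saving exceeds 99.9%, but a workload that must upload frequent image crops or full-resolution clips would land far lower.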

Accuracy and reliability metrics ensure edge AI systems meet operational requirements. Organizations should track false positive and false negative rates for classification tasks, mean absolute error for regression problems, and system uptime percentages. Comparing edge model performance against cloud-based counterparts helps validate that optimization efforts maintain acceptable accuracy.
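The metrics named above are easy to compute from logged predictions. The sketch below assumes binary labels encoded as 0 and 1 and uses hypothetical function names; running it on the same evaluation set for both the edge model and its cloud counterpart gives a direct comparison.

```python
def error_rates(y_true, y_pred):
    """False positive and false negative rates for binary labels (0 or 1)."""
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    negatives = sum(1 for t in y_true if t == 0)
    positives = sum(1 for t in y_true if t == 1)
    return {
        "fpr": fp / negatives if negatives else 0.0,
        "fnr": fn / positives if positives else 0.0,
    }

def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```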

Business Impact Assessment

Beyond technical metrics, edge AI should deliver measurable business value. Manufacturing implementations might track defect detection rates, production throughput improvements, or maintenance cost reductions. Retail deployments could measure customer experience enhancements, inventory accuracy improvements, or shrinkage reduction from loss prevention systems.

Energy efficiency represents both an operational and environmental benefit. Edge AI can reduce energy consumption compared to cloud-centric approaches by cutting data transmission overhead and enabling localized optimization, although the net effect depends on the efficiency of the edge hardware itself. Organizations should quantify energy savings and calculate corresponding cost reductions and carbon footprint improvements.

🌐 The Future Landscape of Distributed Intelligence

Edge AI continues evolving rapidly, with several trends shaping its future trajectory. Neuromorphic computing chips designed to mimic biological neural networks promise dramatic efficiency improvements for edge AI workloads. These specialized processors could enable sophisticated AI capabilities in ultra-low-power devices, expanding edge intelligence to applications previously considered impractical.

Automated machine learning (AutoML) tools are being adapted for edge environments, enabling domain experts without deep AI expertise to develop and deploy custom models. These platforms automatically select architectures, optimize hyperparameters, and compress models for edge deployment, democratizing AI development beyond specialized data science teams.

Integration with Extended Reality

Augmented and virtual reality applications demand low latency, which processing at the edge helps deliver. AR glasses that overlay contextual information on the real world require instant object recognition and scene understanding, capabilities that edge processing enables. As XR devices become more prevalent, edge AI will power immersive experiences in training, maintenance, gaming, and collaboration.

Digital twins, virtual replicas of physical assets updated in real time, represent a convergence point for edge AI and cloud-based analysis. Edge sensors continuously feed data to cloud-based simulations, while AI models running at the edge make immediate operational decisions. This bidirectional intelligence flow enables predictive optimization and what-if scenario planning across complex systems.

Building an Edge AI Strategy for Your Organization

Organizations embarking on edge AI journeys should begin with clear use case identification. Not every application benefits from edge processing—the ideal candidates involve real-time requirements, privacy concerns, bandwidth limitations, or offline operation needs. Conduct a thorough analysis of existing workflows to identify bottlenecks and opportunities where edge AI delivers meaningful advantages.

Start with pilot projects that demonstrate value quickly while limiting risk. Select use cases with well-defined success metrics and manageable scope. These initial implementations provide learning opportunities, helping teams develop expertise in edge AI deployment, operations, and optimization before scaling to enterprise-wide deployments.

Partner selection proves critical for edge AI success. Hardware vendors, software platforms, system integrators, and managed service providers each play important roles. Evaluate partners based on their edge AI experience, technology ecosystem compatibility, and long-term commitment to the space. Open standards and interoperability should guide platform choices to avoid vendor lock-in.

Skills and Team Development

Edge AI requires multidisciplinary teams combining data science, software engineering, hardware expertise, and operational knowledge. Invest in training existing staff on edge technologies while recruiting specialists in key areas. Foster collaboration between teams traditionally siloed—data scientists working alongside embedded systems engineers and operations personnel.

Establish centers of excellence that develop best practices, reusable components, and reference architectures. These centers accelerate subsequent projects by providing proven patterns and reducing reinvention. Create feedback loops that capture lessons learned from deployments, continuously improving organizational edge AI capabilities.

⚡ Maximizing the Edge-Cloud Synergy

The most powerful implementations recognize that edge and cloud aren’t competing approaches but complementary components of a unified intelligence architecture. Design systems that leverage each environment’s strengths—edge for real-time responsiveness and privacy, cloud for computational intensity and coordination. This hybrid approach delivers capabilities neither environment could achieve independently.

Implement intelligent data governance that determines what information stays local, what transmits to the cloud, and what retention policies apply. Privacy regulations like GDPR and CCPA influence these decisions, as do bandwidth costs and storage limitations. Create tiered storage strategies where edge devices maintain recent data for local analysis while cloud platforms preserve long-term historical records.
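Such governance rules can be expressed as a small policy table that the edge software consults for every record. The categories, destinations, and retention periods below are hypothetical placeholders; actual values would come from legal and operational requirements.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Policy:
    destination: str      # "local" or "cloud"
    retention_days: int

# Hypothetical policy table keyed by data category.
POLICIES = {
    "raw_video": Policy("local", 7),
    "personal": Policy("local", 30),
    "anomaly_event": Policy("cloud", 365),
    "aggregate": Policy("cloud", 1825),
}
DEFAULT_POLICY = Policy("local", 1)   # unknown data stays on the device

def route(category):
    """Look up where a record may go and how long it may be kept."""
    return POLICIES.get(category, DEFAULT_POLICY)
```

Defaulting unknown categories to short-lived local storage is a deliberately conservative choice: new data types do not leave the device until someone has classified them.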

Edge AI represents more than a technological advancement—it’s a fundamental shift in how we architect intelligent systems. By processing data where it’s created, we unlock real-time insights previously impossible, enable new applications that demand instant responsiveness, and create privacy-preserving solutions for sensitive domains. The organizations that successfully harness edge AI’s power will gain competitive advantages through faster decision-making, reduced operational costs, and enhanced customer experiences.

As edge AI technology matures and becomes more accessible, its adoption will accelerate across industries. The convergence with 5G networks, advanced chipsets, and sophisticated software frameworks removes barriers to entry, allowing organizations of all sizes to benefit from distributed intelligence. The future belongs to systems that intelligently distribute computation across the edge-cloud continuum, extracting maximum value from data wherever it resides.

Toni Santos is a meteorological researcher and atmospheric data specialist focusing on the study of airflow dynamics, citizen-based weather observation, and the computational models that decode cloud behavior. Through an interdisciplinary and sensor-focused lens, Toni investigates how humanity has captured wind patterns, atmospheric moisture, and climate signals across landscapes, technologies, and distributed networks.

His work is grounded in a fascination with atmosphere not only as phenomenon, but as carrier of environmental information. From airflow pattern capture systems to cloud modeling and distributed sensor networks, Toni uncovers the observational and analytical tools through which communities preserve their relationship with the atmospheric unknown.

With a background in weather instrumentation and atmospheric data history, Toni blends sensor analysis with field research to reveal how weather data is used to shape prediction, transmit climate patterns, and encode environmental knowledge.

As the creative mind behind dralvynas, Toni curates illustrated atmospheric datasets, speculative airflow studies, and interpretive cloud models that revive the deep methodological ties between weather observation, citizen technology, and data-driven science.

His work is a tribute to the evolving methods of Airflow Pattern Capture Technology; the distributed power of Citizen Weather Technology and Networks; the predictive modeling of Cloud Interpretation Systems; and the interconnected infrastructure of Data Logging Networks and Sensors.

Whether you're a weather historian, atmospheric researcher, or curious observer of environmental data wisdom, Toni invites you to explore the hidden layers of climate knowledge: one sensor, one airflow, one cloud pattern at a time.