Edge computing is revolutionizing how businesses collect, process, and analyze data in real time by bringing computational power closer to data sources.

The digital transformation wave has pushed organizations to rethink their data infrastructure strategies. Traditional cloud-based models, while powerful, often struggle with latency issues and bandwidth constraints when dealing with massive volumes of data generated by IoT devices, sensors, and distributed systems. This is where edge computing emerges as a game-changing solution, fundamentally altering how we approach data logging and analytics.

Data logging has always been critical for business intelligence, compliance, troubleshooting, and system optimization. However, data generation is growing exponentially (IDC has forecast roughly 175 zettabytes globally by 2025), and that growth demands smarter, more efficient approaches. Edge computing addresses these challenges by processing data at or near the source, reducing latency, minimizing bandwidth usage, and enabling real-time decision-making that is impractical when every reading must make a round trip to the cloud.

🚀 Understanding Edge Computing in the Data Logging Landscape

Edge computing represents a distributed computing paradigm that brings computation and data storage closer to the locations where they are needed. Rather than sending all raw data to centralized cloud servers or data centers, edge devices process information locally, transmitting only relevant insights or aggregated data to the cloud.

This architectural shift has profound implications for data logging practices. Traditional logging systems often faced the challenge of capturing everything and sorting through it later—an approach that’s increasingly unsustainable with modern data volumes. Edge computing enables intelligent filtering at the source, ensuring that only meaningful data is logged and transmitted.

The edge computing ecosystem typically consists of edge devices (sensors, IoT endpoints, gateways), edge servers or micro data centers positioned close to data sources, and cloud infrastructure for long-term storage and advanced analytics. This multi-tiered architecture creates opportunities for sophisticated data logging strategies that balance local processing with centralized oversight.

💡 The Strategic Advantages of Edge-Enhanced Data Logging

Implementing edge computing for data logging delivers multiple strategic benefits that directly impact operational efficiency and business intelligence capabilities.

Dramatic Latency Reduction

When data doesn’t need to travel to distant cloud servers for processing, response times can shrink from hundreds of milliseconds to single-digit milliseconds or less. For applications requiring immediate insights, such as manufacturing quality control, autonomous vehicles, and financial trading systems, this latency reduction isn’t just beneficial; it’s mission-critical. Edge-based data logging enables split-second decisions based on the most current information available.

Bandwidth Optimization and Cost Savings

Transmitting massive volumes of raw data to the cloud consumes significant bandwidth and incurs substantial costs. Edge computing addresses this by processing and filtering data locally, sending only relevant information upstream. A manufacturing facility with thousands of sensors, for example, might generate terabytes of data daily, but with intelligent edge processing, only megabytes of actionable insights need cloud transmission. This optimization translates directly to reduced networking costs and improved system efficiency.
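
To make the local reduction concrete, here is a minimal sketch of window aggregation, assuming an edge node that collects numeric readings and forwards one summary per window. The function name `summarize_window` and the sample values are illustrative assumptions, not part of any particular platform.

```python
from statistics import mean


def summarize_window(readings):
    """Collapse a window of raw sensor readings into one summary record."""
    if not readings:
        raise ValueError("window is empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical window: one temperature reading per second for a minute.
window = [20.0 + (i % 5) * 0.25 for i in range(60)]
summary = summarize_window(window)
print(summary)  # one small record goes upstream instead of 60 raw values
```

Which statistics to keep is a design choice: minimum, maximum, and mean suit slow-moving signals, while other workloads may need percentiles or counts of threshold crossings.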

Enhanced Data Privacy and Security

Keeping sensitive data closer to its source reduces exposure to potential security breaches during transmission. Edge computing enables privacy-preserving data logging strategies where personally identifiable information (PII) or proprietary data can be processed and anonymized locally before any cloud transmission occurs. This approach can simplify compliance with regulations like GDPR, HIPAA, and CCPA while maintaining valuable analytics capabilities.
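
As one possible illustration of local processing before upload, the sketch below replaces identifying fields with keyed hashes using Python's standard `hmac` module. The field names, the key, and the `pseudonymize` helper are assumptions made for the example. Note that this is pseudonymization rather than full anonymization: under GDPR, pseudonymized data still counts as personal data, so treat this as one layer rather than a complete answer.

```python
import hashlib
import hmac

# Assumption: a per-site secret provisioned securely on the device.
# The literal below is for illustration only; never hard-code a real key.
SITE_KEY = b"example-only-secret"
PII_FIELDS = {"patient_id", "name"}  # hypothetical field names


def pseudonymize(record, key=SITE_KEY):
    """Replace PII values with keyed hashes so records stay linkable but unreadable."""
    cleaned = {}
    for field, value in record.items():
        if field in PII_FIELDS:
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            cleaned[field] = digest.hexdigest()
        else:
            cleaned[field] = value
    return cleaned


raw = {"patient_id": "A-1042", "name": "Jane Doe", "heart_rate": 72}
safe = pseudonymize(raw)
print(safe["heart_rate"], safe["patient_id"][:12])
```

Because the hash is keyed and deterministic, the same patient maps to the same token on every upload, which keeps trend analysis possible without exposing the original identifier.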

Improved Reliability and Resilience

Edge computing architectures can continue functioning even when cloud connectivity is interrupted. This resilience is crucial for critical applications where data logging cannot be compromised by network outages. Edge devices can buffer data locally during connectivity issues and synchronize with central systems once connections are restored. Provided local storage is sized for the longest expected outage, this avoids data loss while maintaining operational continuity.
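
The buffer-and-synchronize pattern can be sketched with Python's built-in `sqlite3` module. This is a simplified store-and-forward queue under stated assumptions: the class name, the table layout, and the `send` callback (which returns `True` on success) are all hypothetical, and a real deployment would also cap the buffer size.

```python
import json
import sqlite3


class StoreAndForwardBuffer:
    """Persist records locally and forward them once the uplink is back."""

    def __init__(self, path=":memory:"):
        # Use a file path on a real device so the buffer survives restarts.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS pending ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)"
        )
        self.db.commit()

    def append(self, record):
        self.db.execute(
            "INSERT INTO pending (payload) VALUES (?)", (json.dumps(record),)
        )
        self.db.commit()

    def flush(self, send):
        """Send oldest-first; delete a record only after send() reports success."""
        sent = 0
        rows = self.db.execute(
            "SELECT id, payload FROM pending ORDER BY id"
        ).fetchall()
        for row_id, payload in rows:
            if not send(json.loads(payload)):
                break  # link is down again; keep the rest for later
            self.db.execute("DELETE FROM pending WHERE id = ?", (row_id,))
            self.db.commit()
            sent += 1
        return sent

    def pending_count(self):
        return self.db.execute("SELECT COUNT(*) FROM pending").fetchone()[0]


buffer = StoreAndForwardBuffer()
for i in range(3):
    buffer.append({"seq": i})

offline_sent = buffer.flush(lambda record: False)  # simulated outage
delivered = []
online_sent = buffer.flush(lambda record: delivered.append(record) or True)
print(offline_sent, online_sent, buffer.pending_count())
```

Deleting only after a confirmed send gives at-least-once delivery, so the receiving side should tolerate an occasional duplicate if a device restarts between sending and deleting.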

📊 Practical Applications Transforming Industries

Edge computing’s impact on data logging extends across diverse industry verticals, each benefiting from reduced latency and smarter data management.

Manufacturing and Industrial IoT

Smart factories deploy thousands of sensors monitoring equipment performance, product quality, environmental conditions, and supply chain logistics. Edge computing enables real-time data logging that detects anomalies within moments: flagging early signs of equipment failure, catching quality defects on the production line, and optimizing energy consumption. Some manufacturers using edge-enhanced logging report 30-50% reductions in unplanned downtime and significant improvements in overall equipment effectiveness (OEE).

Healthcare and Medical Devices

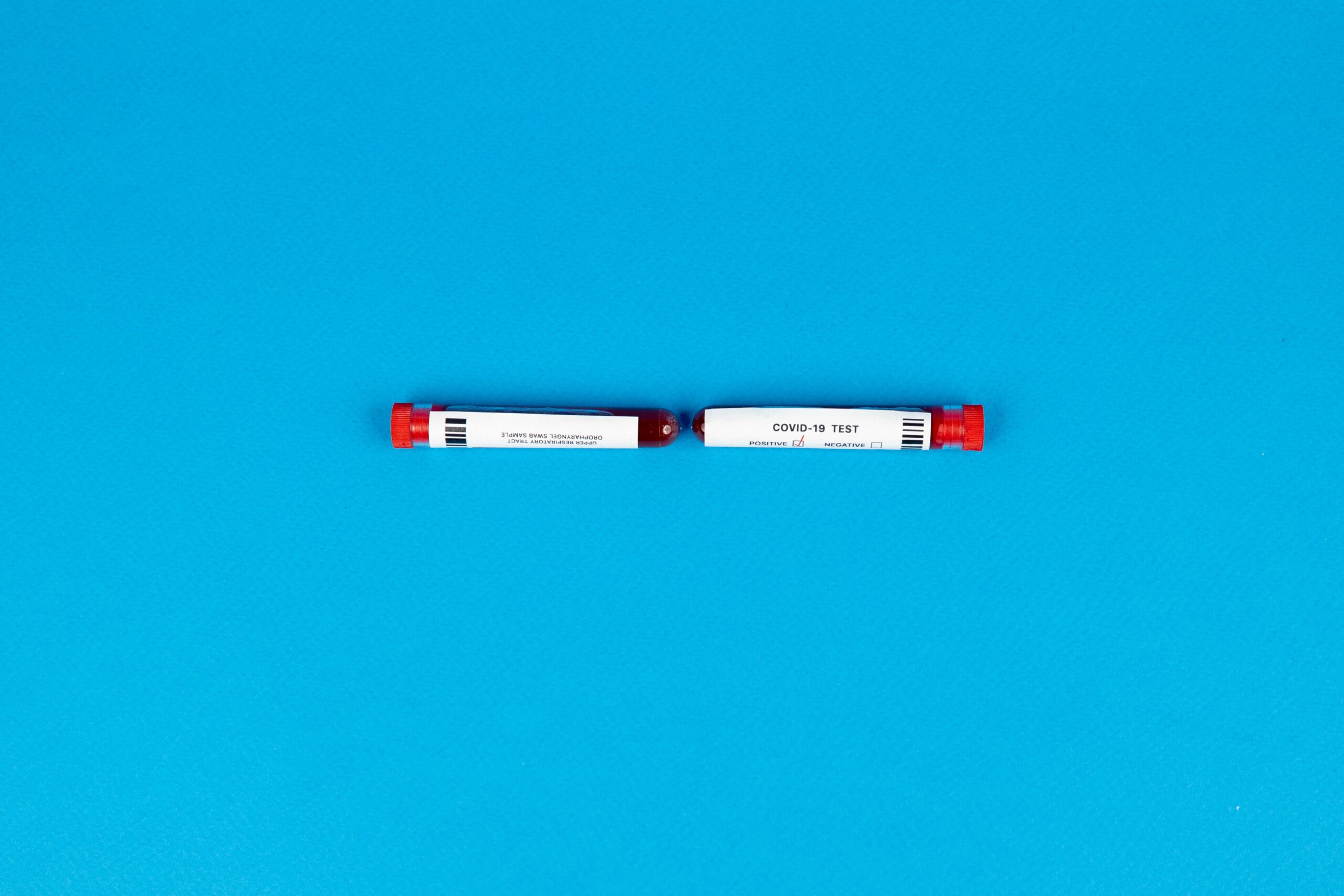

Medical IoT devices generate continuous streams of patient data requiring immediate analysis. Edge computing enables real-time monitoring of vital signs with intelligent logging that alerts healthcare providers to concerning trends instantly. Wearable devices and remote patient monitoring systems process health data locally, logging only significant events or changes that warrant clinical attention, while maintaining patient privacy through local data processing.

Retail and Customer Experience

Modern retail environments use edge computing for intelligent video analytics, inventory management, and customer behavior tracking. Edge-based data logging captures foot traffic patterns, dwell times, and engagement metrics in real time, enabling dynamic pricing, personalized recommendations, and optimized store layouts. This approach processes video data locally, extracting behavioral insights without transmitting raw video footage to the cloud, addressing both bandwidth and privacy concerns.

Transportation and Autonomous Vehicles

By one widely cited industry estimate, a single autonomous vehicle can generate around 4 terabytes of data per day. Edge computing makes autonomous driving viable by processing sensor data locally for immediate navigation decisions while logging only relevant information for fleet management, maintenance predictions, and system improvements. This selective logging approach makes autonomous systems both responsive and sustainable.

🔧 Implementing Edge Computing for Superior Data Logging

Successfully deploying edge computing for enhanced data logging requires thoughtful planning and execution across several key dimensions.

Architecture Design Principles

Effective edge computing architectures balance local processing capabilities with centralized oversight. Start by identifying which data requires immediate processing and which can tolerate latency. Design logging strategies that capture high-frequency events at the edge while aggregating and transmitting summaries to the cloud. Implement hierarchical storage approaches where recent data remains at the edge for quick access while historical data migrates to cloud storage.
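
The hierarchical storage idea can be sketched as a simple age-based split. The 24-hour retention window, the record shape, and the `split_by_age` helper are assumptions chosen for the example; a real policy might also consider record priority or free disk space.

```python
from datetime import datetime, timedelta, timezone

EDGE_RETENTION = timedelta(hours=24)  # assumption: keep one day at the edge


def split_by_age(records, now, retention=EDGE_RETENTION):
    """Separate records that stay at the edge from those due for cloud migration."""
    keep, migrate = [], []
    for record in records:
        age = now - record["ts"]
        (keep if age <= retention else migrate).append(record)
    return keep, migrate


now = datetime(2024, 5, 2, 12, 0, tzinfo=timezone.utc)
records = [
    {"id": 1, "ts": now - timedelta(hours=1)},
    {"id": 2, "ts": now - timedelta(hours=30)},
    {"id": 3, "ts": now - timedelta(hours=23)},
]
keep, migrate = split_by_age(records, now)
print([r["id"] for r in keep], [r["id"] for r in migrate])
```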

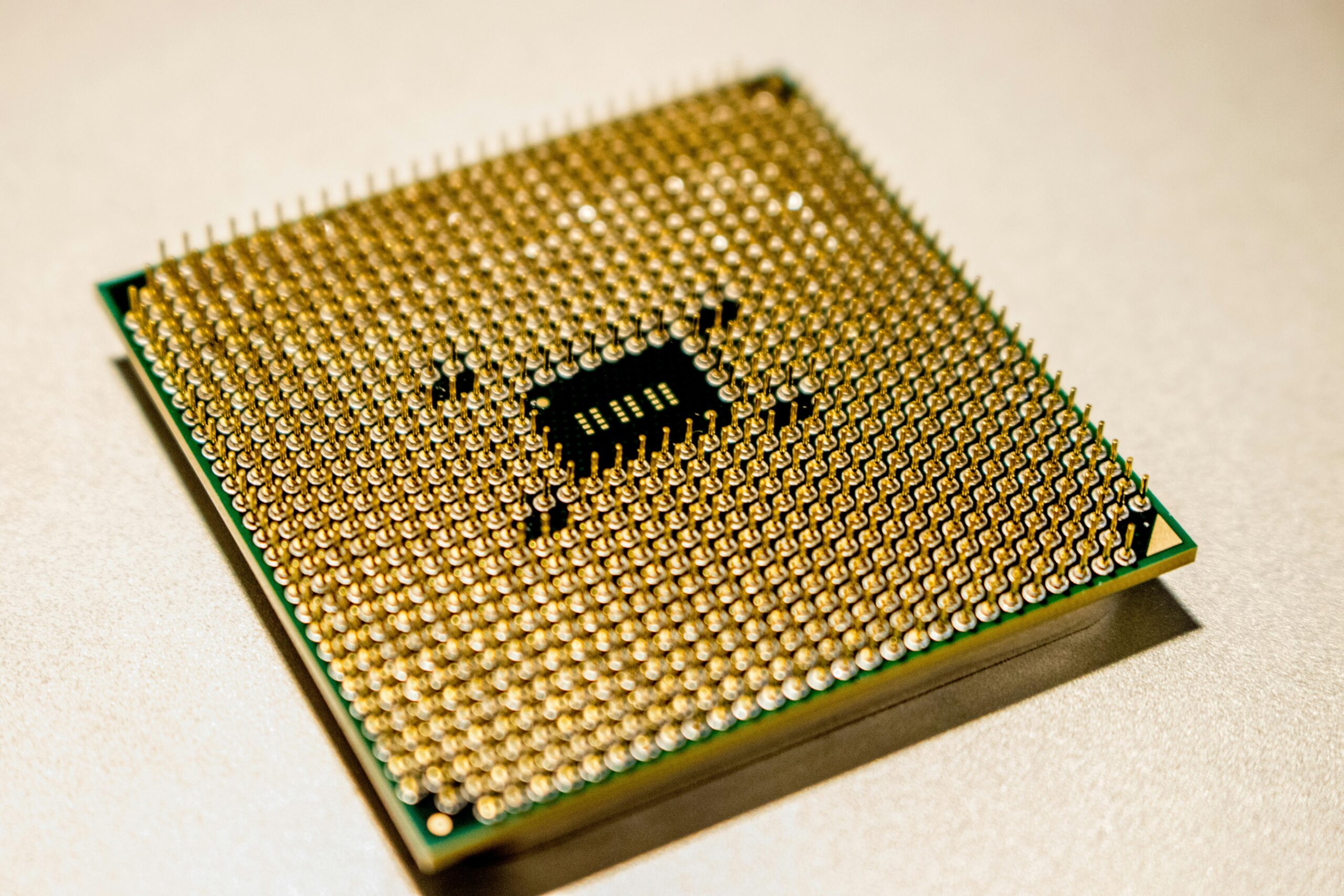

Selecting Appropriate Hardware

Edge devices range from simple microcontrollers to powerful edge servers with GPU acceleration. Hardware selection depends on processing requirements, environmental conditions, power constraints, and budget considerations. Industrial applications might require ruggedized devices withstanding extreme temperatures and vibrations, while retail environments might prioritize compact, aesthetically pleasing form factors. Ensure selected hardware provides adequate storage for local data buffering during connectivity disruptions.

Software and Platform Considerations

Choose edge computing platforms offering robust data logging capabilities, including structured and unstructured data support, time-series databases optimized for IoT workloads, and flexible APIs for integration with existing systems. Popular platforms include AWS IoT Greengrass, Azure IoT Edge, and open-source alternatives like EdgeX Foundry. These platforms provide containerized application deployment, device management, and seamless cloud integration.

Data Governance and Quality Management

Establishing clear data governance policies ensures consistency across distributed edge deployments. Define standardized logging formats, retention policies, and data quality validation rules. Implement metadata tagging strategies that enable effective data discovery and lineage tracking. Edge computing complicates governance by distributing data management responsibilities, making clear policies and automated enforcement mechanisms essential.
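
One way to enforce a standardized format and validation rules automatically is to define the record shape in code and check every record before it is logged. The sketch below is illustrative: the `LogRecord` fields, the schema version string, and the specific rules are assumptions, not an established standard.

```python
import json
import math
from dataclasses import asdict, dataclass
from datetime import datetime

SCHEMA_VERSION = "1.0"  # hypothetical version tag carried in every record


@dataclass(frozen=True)
class LogRecord:
    device_id: str
    timestamp: str  # ISO 8601 with an explicit UTC offset
    metric: str
    value: float
    unit: str
    schema_version: str = SCHEMA_VERSION


def validate(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    if not record.device_id:
        problems.append("device_id is empty")
    try:
        parsed = datetime.fromisoformat(record.timestamp)
        if parsed.tzinfo is None:
            problems.append("timestamp has no UTC offset")
    except ValueError:
        problems.append("timestamp is not ISO 8601")
    if not math.isfinite(record.value):
        problems.append("value is not a finite number")
    return problems


good = LogRecord("press-07", "2024-05-01T12:00:00+00:00", "temperature", 71.5, "C")
bad = LogRecord("", "yesterday", "temperature", float("nan"), "C")

line = json.dumps(asdict(good), sort_keys=True)  # one JSON object per log line
print(validate(good), validate(bad))
```

Carrying a schema version in each record makes it easier to evolve the format later without breaking consumers that still read older logs.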

⚡ Overcoming Implementation Challenges

While edge computing offers substantial benefits, organizations must navigate several challenges when enhancing data logging capabilities.

Managing Distributed Complexity

Edge computing inherently increases system complexity by distributing processing across numerous locations. Organizations need robust monitoring and management tools providing visibility into edge device health, performance metrics, and data logging status. Centralized dashboards aggregating telemetry from distributed edge nodes help operations teams maintain system health and quickly identify issues requiring attention.

Ensuring Consistent Updates and Security

Keeping edge devices updated with the latest software, security patches, and logging configurations becomes challenging at scale. Implement automated over-the-air (OTA) update mechanisms with rollback capabilities to manage edge device software. Adopt zero-trust security models where edge devices authenticate continuously and access controls are enforced at every level. Encrypt logged data both in transit and at rest, even at the edge.
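
The update-with-rollback flow can be sketched in a few lines. This is a deliberately simplified model: the `device` dictionary, the `apply_update` helper, and the `health_check` callback are hypothetical, and a production OTA system would verify a cryptographic signature rather than only a SHA-256 digest.

```python
import hashlib


def apply_update(device, payload, expected_sha256, health_check):
    """Install payload only if its digest matches; restore the old image if the check fails."""
    if hashlib.sha256(payload).hexdigest() != expected_sha256:
        return "rejected"  # corrupted or unexpected payload; nothing was changed
    previous = device["firmware"]
    device["firmware"] = payload
    if health_check(device):
        return "updated"
    device["firmware"] = previous  # roll back to the last known-good image
    return "rolled_back"


device = {"firmware": b"v1"}
v2 = b"v2"
v2_digest = hashlib.sha256(v2).hexdigest()

first = apply_update(device, v2, v2_digest, lambda d: True)
second = apply_update(device, b"v3", hashlib.sha256(b"v3").hexdigest(), lambda d: False)
third = apply_update(device, b"v4", v2_digest, lambda d: True)  # digest mismatch
print(first, second, third, device["firmware"])
```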

Balancing Processing Capabilities

Determining the optimal division of processing between edge and cloud requires careful analysis. Over-processing at the edge may result in unnecessarily expensive hardware, while under-processing defeats the purpose of edge computing. Profile your data logging and analytics requirements to identify the appropriate processing distribution. Start with simpler filtering and aggregation at the edge, then progressively move more sophisticated analytics to the edge as requirements and capabilities evolve.

Standardization and Interoperability

The edge computing ecosystem includes diverse devices, protocols, and platforms. Adopting industry standards like MQTT for messaging, OPC UA for industrial communication, and containerization for application deployment improves interoperability and reduces vendor lock-in. Open standards facilitate integration between edge devices from different manufacturers and simplify data logging pipeline development.

🎯 Advanced Techniques for Smarter Insights

Beyond basic data collection, edge computing enables sophisticated analytics approaches that transform raw logs into actionable intelligence.

Machine Learning at the Edge

Deploying trained machine learning models directly on edge devices enables intelligent data logging that recognizes patterns, detects anomalies, and predicts outcomes in real time. Edge ML models can identify which events warrant detailed logging and which represent normal operations requiring only summary statistics. TensorFlow Lite, PyTorch Mobile, and specialized ML accelerators make sophisticated inference feasible on resource-constrained edge hardware.

Event-Driven Logging Strategies

Rather than logging continuously at fixed intervals, event-driven approaches capture data when specific conditions occur. This selective logging dramatically reduces data volumes while ensuring critical events are never missed. Define intelligent triggers based on threshold violations, pattern recognition, or contextual rules. Event-driven logging paired with edge processing creates efficient systems that log comprehensively when it matters while remaining quiet during normal operations.
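
A threshold-based trigger might look like the sketch below. It uses two limits (a hysteresis band) so that a value hovering near the threshold does not flood the log with repeated events. The class name and the limits are assumptions chosen for illustration.

```python
class ThresholdTrigger:
    """Emit an event when a value crosses a limit, and again when it recovers."""

    def __init__(self, high, clear):
        if clear >= high:
            raise ValueError("clear level must be below the high limit")
        self.high = high
        self.clear = clear
        self.active = False

    def update(self, value):
        if not self.active and value > self.high:
            self.active = True
            return "alarm_raised"
        if self.active and value < self.clear:
            self.active = False
            return "alarm_cleared"
        return None  # nothing worth logging


trigger = ThresholdTrigger(high=80.0, clear=75.0)
events = []
for value in [70, 79, 81, 83, 78, 82, 74, 70]:
    event = trigger.update(value)
    if event is not None:
        events.append((value, event))
print(events)
```

Eight readings produce two log entries here: one when the value first exceeds 80, and one when it drops back below 75.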

Real-Time Data Enrichment

Edge computing enables data enrichment at the source, adding valuable context before transmission or storage. Combine sensor readings with location data, environmental conditions, operational states, or external data sources to create richer, more meaningful logs. This enrichment at the edge reduces the need for complex post-processing and enables more immediate insights from logged data.
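
In its simplest form, enrichment is a merge of device context into each reading before it is stored. The field names below are hypothetical, and the rule that measured fields win on a name clash is one possible design choice.

```python
def enrich(reading, context):
    """Attach context fields to a reading; measured fields win on a name clash."""
    return {**context, **reading}


# Hypothetical context an edge gateway already knows about its device.
context = {"site": "plant-a", "line": 3, "machine_state": "running"}
reading = {"metric": "vibration_mm_s", "value": 4.2}

enriched = enrich(reading, context)
print(enriched)
```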

Adaptive Sampling and Compression

Implement intelligent sampling algorithms that adjust logging frequency based on current conditions. When systems operate normally, reduce sampling rates and apply aggressive compression. When anomalies are detected, automatically increase logging granularity to capture detailed diagnostic information. This adaptive approach optimizes the trade-off between data completeness and resource efficiency.
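
One simple way to implement this is to snap to a fast sampling interval the moment an anomaly is flagged, then back off gradually while conditions stay normal. The interval values and the `next_interval` helper are illustrative assumptions; how anomalies are detected is left out of the sketch.

```python
def next_interval(current, anomalous, fast=1.0, slow=60.0, backoff=2.0):
    """Seconds until the next sample: fast on anomaly, otherwise back off toward slow."""
    if anomalous:
        return fast
    return min(current * backoff, slow)


interval = 60.0
intervals = []
for anomalous in [False, True, False, False, False]:
    interval = next_interval(interval, anomalous)
    intervals.append(interval)
print(intervals)
```

The gradual backoff keeps logging dense for a while after an anomaly, which helps capture the recovery as well as the event itself.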

📈 Measuring Success and Continuous Improvement

Establishing key performance indicators helps organizations quantify the value of edge-enhanced data logging and guide ongoing optimization efforts.

Track latency metrics comparing edge-processed insights against traditional cloud-only approaches. Monitor bandwidth utilization and associated costs before and after edge implementation. Measure data logging completeness, ensuring critical events are captured despite volume reductions. Assess system reliability through uptime statistics and successful data synchronization rates during connectivity challenges.
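
Two of these measurements reduce to short calculations. The sketch below computes a percentage reduction in bandwidth and a nearest-rank latency percentile. All figures are made-up sample numbers, not benchmark results.

```python
import math


def percent_reduction(before, after):
    """Percentage drop from a baseline value to a new value."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (before - after) / before


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical daily upload volume in GB, before and after edge filtering.
saving = percent_reduction(500.0, 20.0)

# Hypothetical decision latencies in milliseconds.
latencies_ms = [12, 9, 15, 110, 11, 10, 14, 13, 8, 12]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(saving, p50, p95)
```

Reporting a high percentile alongside the median matters because a single slow outlier, like the 110 ms sample above, is invisible in the median but is exactly what real-time applications feel.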

Calculate the business impact of faster insights—reduced downtime, improved quality metrics, enhanced customer experiences, or operational cost savings. These outcome-based metrics often provide the most compelling justification for edge computing investments.

Implement continuous monitoring of edge device health, including CPU and memory utilization, storage capacity, network connectivity, and logging pipeline performance. Establish alerting thresholds that notify operations teams of potential issues before they impact data collection. Regular analysis of logging patterns helps identify optimization opportunities and informs future architecture decisions.

🌟 The Future of Edge Computing and Data Intelligence

The convergence of edge computing, artificial intelligence, and advanced analytics is creating unprecedented opportunities for data-driven decision-making.

Emerging 5G networks dramatically expand edge computing capabilities with higher bandwidth, lower latency, and support for massive device connectivity. This infrastructure evolution enables more sophisticated edge processing and richer data logging scenarios. Edge AI chips specifically designed for machine learning inference are becoming more powerful and energy-efficient, making advanced analytics feasible on increasingly compact devices.

Federated learning approaches enable training machine learning models across distributed edge devices without centralizing raw data, combining the privacy benefits of edge computing with the power of collaborative learning. This technique is particularly promising for applications requiring personalization while maintaining data privacy.
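
The core aggregation step, commonly called federated averaging, can be sketched without any ML framework: each device sends its locally trained weights and its sample count, and the coordinator computes a weighted average. The weights below are toy numbers, and real systems add secure aggregation, multiple rounds, and client selection.

```python
def federated_average(updates):
    """Weighted average of model weights; each update is (weights, num_samples)."""
    total = sum(num_samples for _, num_samples in updates)
    if total == 0:
        raise ValueError("no samples reported")
    size = len(updates[0][0])
    return [
        sum(weights[i] * num_samples for weights, num_samples in updates) / total
        for i in range(size)
    ]


# Two hypothetical edge devices; only weights and counts leave each device.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_weights = federated_average(updates)
print(global_weights)
```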

Digital twins—virtual representations of physical assets—benefit tremendously from edge computing. Real-time data logging from edge devices feeds digital twin models, enabling sophisticated simulations, predictive maintenance, and optimization scenarios that would be impossible with cloud-only architectures.

The integration of blockchain technology with edge computing offers interesting possibilities for tamper-evident, distributed data logging in applications requiring strong audit trails and trust guarantees across organizational boundaries.
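
The building block behind such audit trails is a hash chain, where each log entry includes the hash of the entry before it. The sketch below shows only that building block, under simplifying assumptions: it is not a blockchain (there is no consensus or distribution), and it detects tampering rather than preventing it.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(data, prev_hash):
    body = json.dumps({"data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(chain, data):
    """Append an entry whose hash covers both its data and the previous hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"data": data, "prev": prev_hash, "hash": _digest(data, prev_hash)})


def verify(chain):
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["data"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


chain = []
for value in (1, 2, 3):
    append_entry(chain, {"event": "door_open", "value": value})

intact = verify(chain)
chain[1]["data"] = {"event": "door_open", "value": 99}  # simulate tampering
tampered = verify(chain)
print(intact, tampered)
```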

🔑 Strategic Recommendations for Getting Started

Organizations beginning their edge computing journey should adopt a phased approach that builds capabilities incrementally while delivering measurable value.

Start with pilot projects focusing on specific use cases with clear business value and manageable scope. Manufacturing predictive maintenance, retail customer analytics, or fleet management represent excellent starting points. These pilots provide learning opportunities and proof points without requiring organization-wide transformation.

Invest in team capabilities through training on edge computing architectures, IoT protocols, distributed systems management, and edge-appropriate software development practices. Building internal expertise ensures sustainable, well-maintained edge deployments.

Partner with experienced vendors and system integrators who can guide architecture decisions, recommend appropriate technologies, and help avoid common pitfalls. The edge computing ecosystem is maturing rapidly, and leveraging external expertise accelerates implementation and reduces risk.

Adopt cloud-native practices including containerization, infrastructure-as-code, and DevOps methodologies for edge deployments. These practices improve deployment consistency, simplify updates, and facilitate scaling as edge computing adoption grows.

Design for scalability from the beginning, even if initial deployments are small. Edge computing often grows rapidly once organizations experience its benefits, and architectures that don’t accommodate scaling become bottlenecks. Choose platforms and approaches that support growth from dozens to thousands of edge devices.

The transformation enabled by edge computing represents more than a technological upgrade—it’s a fundamental shift in how organizations approach data, analytics, and decision-making. By bringing computation closer to data sources, edge computing makes data logging more efficient, insights more timely, and systems more responsive. Organizations that successfully harness edge computing for enhanced data logging position themselves to compete effectively in an increasingly data-driven, real-time business environment. The power of edge computing isn’t just in the technology itself, but in the smarter, faster, more actionable insights it enables across every aspect of modern operations.

Toni Santos is a meteorological researcher and atmospheric data specialist focusing on the study of airflow dynamics, citizen-based weather observation, and the computational models that decode cloud behavior. Through an interdisciplinary and sensor-focused lens, Toni investigates how humanity has captured wind patterns, atmospheric moisture, and climate signals across landscapes, technologies, and distributed networks.

His work is grounded in a fascination with atmosphere not only as phenomenon, but as carrier of environmental information. From airflow pattern capture systems to cloud modeling and distributed sensor networks, Toni uncovers the observational and analytical tools through which communities preserve their relationship with the atmospheric unknown.

With a background in weather instrumentation and atmospheric data history, Toni blends sensor analysis with field research to reveal how weather data is used to shape prediction, transmit climate patterns, and encode environmental knowledge. As the creative mind behind dralvynas, Toni curates illustrated atmospheric datasets, speculative airflow studies, and interpretive cloud models that revive the deep methodological ties between weather observation, citizen technology, and data-driven science.

His work is a tribute to:

- The evolving methods of Airflow Pattern Capture Technology
- The distributed power of Citizen Weather Technology and Networks
- The predictive modeling of Cloud Interpretation Systems
- The interconnected infrastructure of Data Logging Networks and Sensors

Whether you're a weather historian, atmospheric researcher, or curious observer of environmental data wisdom, Toni invites you to explore the hidden layers of climate knowledge: one sensor, one airflow, one cloud pattern at a time.