The age-old debate of human intuition versus computational precision has reached new dimensions as artificial intelligence models increasingly challenge human performance across diverse domains. 🤖

The Evolution of Machine Learning Benchmarks

For decades, researchers have sought to quantify and compare the capabilities of automated systems against human cognition. What began as simple pattern recognition tasks has evolved into sophisticated evaluations spanning medical diagnosis, language understanding, visual perception, and complex decision-making scenarios.

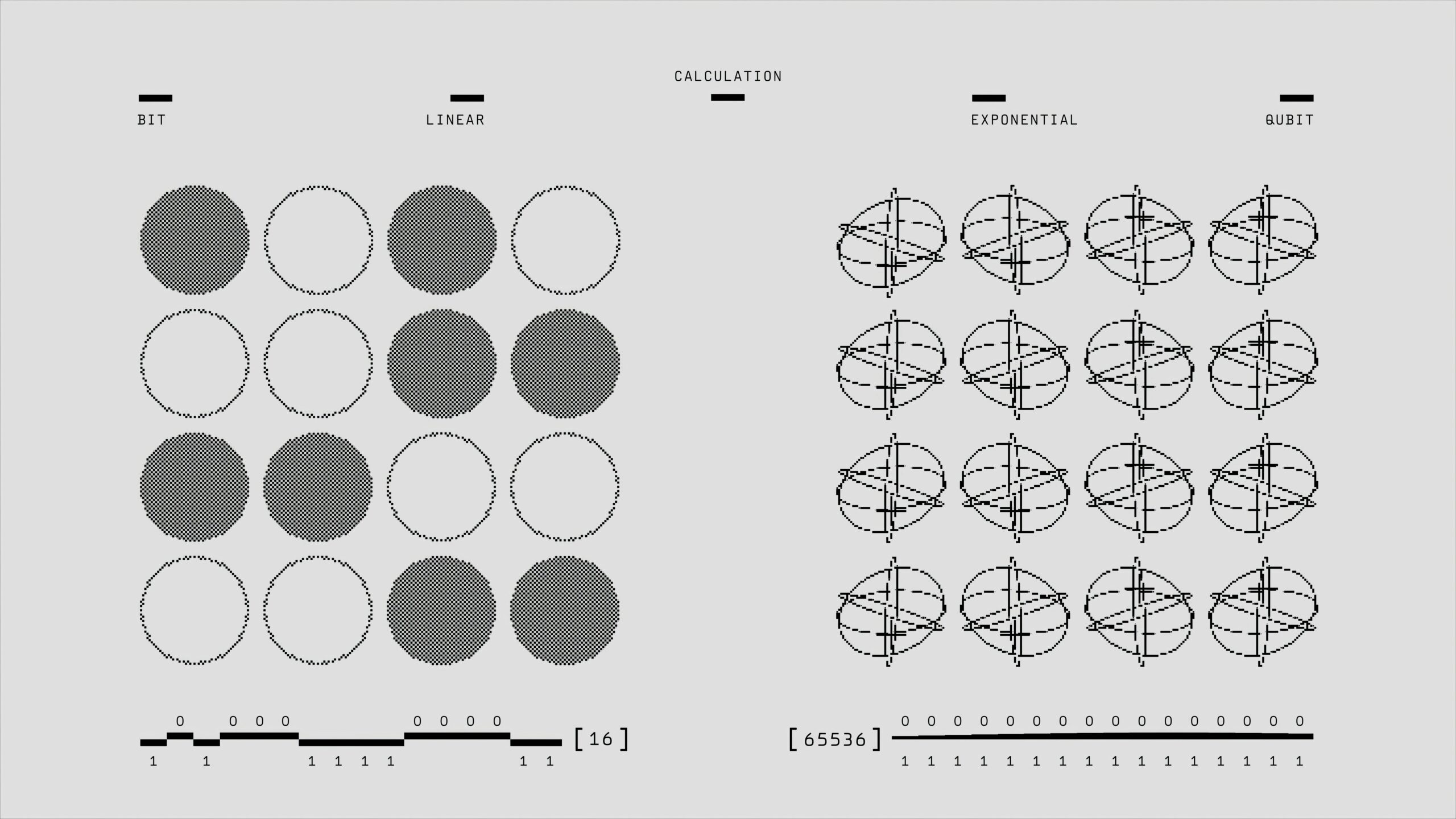

Machine learning models, particularly deep neural networks, have demonstrated remarkable proficiency in structured tasks. However, the question remains: are these systems genuinely achieving human-level understanding, or are they simply exploiting statistical regularities in training data?

The assessment of model accuracy compared to human observers requires careful consideration of multiple factors including task complexity, domain expertise, contextual understanding, and the ability to generalize beyond training examples. Each dimension reveals different strengths and limitations of both biological and artificial intelligence.

Measuring Performance: Beyond Simple Accuracy Metrics 📊

Traditional accuracy measurements—the percentage of correct predictions—provide only a superficial understanding of comparative performance. Human observers and machine learning models often make different types of errors, reflecting fundamentally distinct processing mechanisms.
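To make this limitation concrete, here is a minimal Python sketch using made-up labels. The 95/5 class split is an illustrative assumption, not data from any study. On an imbalanced screening set, a trivial classifier that always predicts "negative" earns a high accuracy score while detecting no positive cases at all.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def recall(y_true, y_pred):
    """Fraction of actual positives (label 1) that were predicted positive."""
    positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not positives:
        return 0.0
    return sum(1 for p in positives if p == 1) / len(positives)


# Hypothetical screening set: 95 negatives (0) and 5 positives (1).
y_true = [0] * 95 + [1] * 5

# A degenerate "model" that always predicts the majority class.
y_always_negative = [0] * len(y_true)

print(accuracy(y_true, y_always_negative))  # 0.95
print(recall(y_true, y_always_negative))    # 0.0
```

A 95% accuracy figure here says nothing about whether the system finds the cases that matter, which is why the comparisons below look at error types rather than a single percentage.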

Humans excel at leveraging contextual cues, prior knowledge, and common sense reasoning. A radiologist examining an X-ray doesn’t merely identify patterns; they integrate patient history, anatomical knowledge, and clinical experience. Conversely, machine learning models demonstrate consistency and scalability, processing thousands of cases without fatigue-induced degradation.

Precision, Recall, and the Trade-off Dilemma

When comparing human and machine performance, precision and recall offer deeper insight. Precision is the proportion of positive identifications that are actually correct, while recall is the proportion of actual positives that are successfully identified.
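Both definitions reduce to simple ratios over confusion-matrix counts. The sketch below computes them for a hypothetical reader (human or model) whose counts are invented for illustration; the `Confusion` type and function names are our own, not from any particular library.

```python
from typing import NamedTuple


class Confusion(NamedTuple):
    """Counts from a binary classifier's confusion matrix."""
    tp: int  # true positives: flagged and actually positive
    fp: int  # false positives: flagged but actually negative
    fn: int  # false negatives: actually positive but not flagged


def precision(c: Confusion) -> float:
    """TP / (TP + FP): how many of the flagged cases are truly positive."""
    flagged = c.tp + c.fp
    return c.tp / flagged if flagged else 0.0


def recall(c: Confusion) -> float:
    """TP / (TP + FN): how many of the actual positives were flagged."""
    actual = c.tp + c.fn
    return c.tp / actual if actual else 0.0


# Hypothetical reader: flags 50 cases, 40 of them correctly, and misses 20 positives.
reader = Confusion(tp=40, fp=10, fn=20)
print(f"precision={precision(reader):.2f}, recall={recall(reader):.2f}")
# precision=0.80, recall=0.67
```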

Medical screening applications illustrate this trade-off well. A highly sensitive model (high recall) might flag numerous potential abnormalities, including many false positives that require human verification. A model tuned for high precision flags only the cases it is most confident about, and so might miss subtle cases that experienced clinicians would catch.

Human observers naturally calibrate this balance based on risk assessment and contextual factors. In an artificial system, the same balance has to be set explicitly, through the training objective or the choice of decision threshold.
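In practice, the threshold is often the main control. The sketch below uses invented scores and labels, both assumptions for illustration only. It flags every case whose model score meets the threshold, and shows that lowering the threshold raises recall at the cost of precision.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when every score >= threshold is flagged positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Invented model scores (higher = more suspicious) and ground-truth labels.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 1, 0]

for threshold in (0.85, 0.50, 0.15):
    p, r = precision_recall_at(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
# threshold=0.85  precision=1.00  recall=0.40
# threshold=0.50  precision=0.67  recall=0.80
# threshold=0.15  precision=0.56  recall=1.00
```

Where to set the threshold is a policy decision (how costly is a missed case versus a false alarm?), which is exactly the judgment human observers make implicitly.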

Domain-Specific Performance Landscapes

The relative performance of humans versus machines varies dramatically across different domains, revealing the complementary nature of biological and artificial intelligence.

Visual Recognition and Computer Vision 👁️

Computer vision has witnessed perhaps the most dramatic progress in recent years. Models trained on large datasets such as ImageNet have been reported to exceed a human baseline on that benchmark's object classification task. A well-trained convolutional neural network can distinguish among more than a hundred dog breeds with accuracy that few non-expert humans could match.

However, this advantage diminishes when context matters. Humans effortlessly understand unusual perspectives, occluded objects, and novel scenarios through common sense reasoning. A child recognizes a partially visible teddy bear behind a pillow; many computer vision systems struggle with such contextual inference.

Adversarial examples further expose vulnerabilities in machine vision. Minor pixel modifications imperceptible to humans can cause dramatic misclassifications in neural networks—a phenomenon without clear human analogue.
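The mechanism can be illustrated with the fast gradient sign method (FGSM) applied to the simplest possible "vision model": a logistic-regression classifier over a 100-pixel input. The weights, pixel values, and perturbation size below are hand-picked assumptions for illustration. Real attacks target deep networks using automatic differentiation, but the principle is the same: many tiny per-pixel nudges, each aligned with the model's gradient, add up to a large change in the output.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def logit(w, b, x):
    """Linear score w.x + b; the model predicts class 1 when this is > 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x) -> int:
    return int(logit(w, b, x) > 0)


def fgsm(w, b, x, y, eps):
    """FGSM for logistic regression with log loss and true label y in {0, 1}.

    The gradient of the loss with respect to the input is (sigmoid(w.x + b) - y) * w.
    Each pixel moves by eps in the sign of that gradient, then is clipped to [0, 1].
    """
    s = sigmoid(logit(w, b, x))
    grad = [(s - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]


# Hypothetical 100-pixel model and image (pixel intensities on a 0-1 scale).
w = [0.05 if i % 2 == 0 else -0.05 for i in range(100)]
b = 0.0
x = [0.5 + 0.02 * sign(wi) for wi in w]

x_adv = fgsm(w, b, x, y=1, eps=0.03)
print(predict(w, b, x), f"{logit(w, b, x):+.3f}")          # 1 +0.100
print(predict(w, b, x_adv), f"{logit(w, b, x_adv):+.3f}")  # 0 -0.050
print(f"{max(abs(a - c) for a, c in zip(x_adv, x)):.3f}")  # 0.030
```

No pixel changes by more than 0.03, yet the prediction flips, because the perturbation is aligned with all 100 weights at once.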

Natural Language Understanding and Communication

Large language models have revolutionized natural language processing, generating coherent text and answering complex questions. Systems like GPT-4 demonstrate impressive linguistic capabilities, often producing responses indistinguishable from human writing in controlled evaluations.

Yet fundamental limitations persist. These models lack genuine understanding of meaning, operating instead on statistical patterns in training data. They cannot truly comprehend emotions, cultural nuances, or situational appropriateness the way human communicators do naturally.

Sarcasm detection, metaphorical language, and context-dependent interpretation remain challenging for automated systems. Human observers leverage lifetime experience and emotional intelligence—dimensions not captured in training datasets.

The Expertise Factor: Novice vs. Expert Performance

Comparisons between human and machine accuracy must account for expertise levels. A trained radiologist and a medical student examining the same scan represent vastly different benchmarks.

Reported results typically place machine learning models on par with trained professionals rather than with average individuals, so the choice of human baseline matters profoundly when evaluating practical deployment scenarios.

Training Data Quality and Human Expert Variation

Models learn from labeled data, often annotated by human experts. The quality and consistency of these annotations directly impact model performance. Interestingly, inter-rater reliability among human experts often reveals significant disagreement even within specialized domains.
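Inter-rater reliability is often reported with Cohen's kappa, which corrects raw agreement for the agreement two raters would reach by chance. The sketch below implements the standard two-rater formula; the ten scan labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected if each rater labeled independently at
    their own base rates.
    """
    n = len(rater_a)
    p_o = sum(1 for a, b in zip(rater_a, rater_b) if a == b) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in labels) / (n * n)
    if p_e == 1.0:
        return 1.0  # convention: both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical readings of the same ten scans by two radiologists.
a = ["abn", "abn", "abn", "nor", "nor", "nor", "nor", "nor", "abn", "nor"]
b = ["abn", "abn", "nor", "nor", "nor", "nor", "abn", "nor", "abn", "nor"]
print(f"{cohens_kappa(a, b):.2f}")  # 0.58
```

The two readers agree on 8 of 10 scans, but once chance agreement is removed, kappa is only about 0.58.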

Studies in medical imaging frequently show expert radiologists disagreeing on diagnoses in 10-30% of cases. Machine learning models trained on consensus labels may effectively encode aggregate expert opinion rather than compete with individual practitioners.
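A simple way to build such consensus labels is a majority vote over several annotators. The sketch below is an illustrative assumption: annotation pipelines vary, and many projects use expert adjudication or probabilistic label models instead. It returns the majority label and flags ties for adjudication. Scan IDs and votes are hypothetical.

```python
from collections import Counter


def consensus_label(votes):
    """Majority-vote label across annotators; returns None on a tie."""
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # no strict majority: send to an adjudicator
    return ranked[0][0]


annotations = {
    "scan_01": ["abnormal", "abnormal", "normal"],
    "scan_02": ["normal", "normal", "normal"],
    "scan_03": ["abnormal", "normal"],  # the two readers disagree
}
for scan, votes in annotations.items():
    print(scan, consensus_label(votes))
# scan_01 abnormal
# scan_02 normal
# scan_03 None
```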

This perspective reframes the comparison: rather than human versus machine, we might consider machine as distilled collective human expertise, optimized for consistency and speed.

Speed, Scale, and Consistency Advantages ⚡

Beyond raw accuracy, practical performance encompasses efficiency dimensions where machines demonstrate clear advantages.

An automated content moderation system can review millions of social media posts daily—impossible for human moderators. Financial fraud detection algorithms process transactions in milliseconds, identifying suspicious patterns across vast networks.

Consistency represents another critical advantage. Human performance varies with fatigue, emotional state, time of day, and recent experiences. A deterministic model produces identical outputs given identical inputs, eliminating these sources of variability.

However, this consistency can become rigidity. Humans adapt to novel situations and recognize when established rules shouldn’t apply—flexibility that requires explicit programming in automated systems.

Error Patterns: Different Failure Modes

Perhaps more revealing than aggregate accuracy statistics are the qualitative differences in how humans and machines fail.

Human Error Characteristics

Human errors often stem from cognitive biases, attention limitations, and heuristic reasoning shortcuts. Confirmation bias leads observers to interpret ambiguous evidence as supporting pre-existing beliefs. Inattentional blindness causes missed observations when attention focuses elsewhere.

These errors generally follow predictable psychological patterns. Understanding these patterns enables systematic error reduction through training, checklist protocols, and decision support tools.

Machine Learning Failure Modes 🔧

Machine learning models fail differently. Adversarial vulnerabilities allow tiny, crafted perturbations to cause dramatic misclassifications. Distribution shift—encountering data statistically different from training examples—severely degrades performance.
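Distribution shift can be shown with a deliberately tiny example under assumed numbers. A one-feature threshold classifier fits its training data perfectly, then loses accuracy when a hypothetical change of device offsets every reading. The cases themselves are unchanged; only the input statistics moved.

```python
def fit_midpoint_threshold(class0, class1):
    """Learn a 1-D decision threshold halfway between the two class means."""
    mean0 = sum(class0) / len(class0)
    mean1 = sum(class1) / len(class1)
    return (mean0 + mean1) / 2


def threshold_accuracy(threshold, class0, class1):
    """Fraction correct when values above the threshold are called class 1."""
    correct = sum(1 for v in class0 if v <= threshold)
    correct += sum(1 for v in class1 if v > threshold)
    return correct / (len(class0) + len(class1))


# Training data: one hypothetical feature (e.g. an intensity reading).
train0 = [0.0, 0.5, 1.0, 1.5]
train1 = [3.0, 3.5, 4.0, 4.5]
threshold = fit_midpoint_threshold(train0, train1)  # 2.25

# Deployment data from a device that reads every value 1.5 units higher.
shift = 1.5
test0 = [v + shift for v in train0]
test1 = [v + shift for v in train1]

print(threshold_accuracy(threshold, train0, train1))  # 1.0
print(threshold_accuracy(threshold, test0, test1))    # 0.75
```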

Models also exhibit “Clever Hans” effects, learning spurious correlations rather than meaningful relationships. A medical diagnosis model might learn to identify the hospital where images were captured rather than actual pathology, achieving high training accuracy while failing to generalize.

These failure modes require different mitigation strategies than human errors, including robust training techniques, adversarial training, and careful validation on diverse test sets.

Complementary Intelligence: The Hybrid Approach

Increasingly, practitioners recognize that optimal performance emerges from human-machine collaboration rather than competition. Each brings complementary strengths to complex tasks.

In medical diagnosis, radiologists using AI assistance can outperform both the unassisted radiologist and the model working alone. The model provides consistent screening and highlights potential abnormalities; the physician contributes contextual interpretation and clinical judgment.

This collaborative paradigm appears across domains: automated translation with human post-editing, algorithmic trading with human oversight, and content moderation combining automated flagging with human review.

Designing Effective Human-AI Collaboration

Successful integration requires careful interface design and clear role delineation. Systems should present confidence levels, highlight uncertain cases for human review, and facilitate efficient decision-making workflows.
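One common pattern for "highlighting uncertain cases" is confidence-based deferral. The sketch below assumes the model reports a probability for its predicted label and that those probabilities are reasonably calibrated; the 0.9 cut-off, the `Prediction` type, and the case IDs are hypothetical. Confident cases are accepted automatically, and the rest are queued for a human, least confident first.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's probability for its predicted label, in [0, 1]


def triage(predictions, auto_threshold=0.9):
    """Split predictions into auto-accepted cases and cases deferred to a human.

    Cases at or above `auto_threshold` are accepted automatically; everything
    else is queued for review, least confident first.
    """
    accepted = [p for p in predictions if p.confidence >= auto_threshold]
    deferred = sorted(
        (p for p in predictions if p.confidence < auto_threshold),
        key=lambda p: p.confidence,
    )
    return accepted, deferred


preds = [
    Prediction("case-1", "benign", 0.97),
    Prediction("case-2", "malignant", 0.62),
    Prediction("case-3", "benign", 0.91),
    Prediction("case-4", "malignant", 0.55),
]
accepted, deferred = triage(preds)
print([p.case_id for p in accepted])  # ['case-1', 'case-3']
print([p.case_id for p in deferred])  # ['case-4', 'case-2']
```

Raising `auto_threshold` sends more work to reviewers but lets fewer model errors through unchecked; the right setting depends on reviewer capacity and the cost of a mistake.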

Transparency becomes crucial—humans must understand model reasoning to effectively calibrate trust and identify potential errors. Explainable AI techniques that provide interpretable rationales represent important progress toward this goal.

Evaluation Methodology Challenges 📋

Fairly comparing human and machine performance presents significant methodological challenges. Test conditions, task formulation, and evaluation metrics all influence apparent relative performance.

Controlled laboratory tasks may not reflect real-world complexity. A model achieving 95% accuracy on curated test sets might perform poorly in deployment environments with different data distributions, edge cases, and contextual nuances.
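Small test sets add another source of uncertainty: a few points of accuracy difference between a model and a human reader can sit well within sampling noise. A paired bootstrap is one standard way to check this. The sketch below uses hypothetical 0/1 correctness records for the same 50 cases; because both were scored on identical cases, the cases are resampled jointly. It reports a simple percentile interval, and the resample count and seed are arbitrary choices.

```python
import random


def paired_bootstrap_diff(model_correct, human_correct, n_resamples=2000, seed=0):
    """Approximate 95% percentile bootstrap CI for (model accuracy - human accuracy).

    Both lists hold 1/0 correctness for the SAME cases, so each resample draws
    case indices once and uses them for both readers, preserving the pairing.
    """
    rng = random.Random(seed)
    n = len(model_correct)
    diffs = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        diffs.append(sum(model_correct[i] - human_correct[i] for i in idx) / n)
    diffs.sort()
    lo = diffs[n_resamples * 25 // 1000]       # ~2.5th percentile
    hi = diffs[n_resamples * 975 // 1000 - 1]  # ~97.5th percentile
    return lo, hi


# Hypothetical results on 50 shared cases: model 44 correct, human 41 correct.
model_correct = [1] * 44 + [0] * 6
human_correct = [1] * 40 + [0] * 4 + [1] * 1 + [0] * 5

observed = (sum(model_correct) - sum(human_correct)) / len(model_correct)
lo, hi = paired_bootstrap_diff(model_correct, human_correct)
print(f"observed gap: {observed:+.2f}, 95% CI approx. [{lo:+.2f}, {hi:+.2f}]")
```

If the interval comfortably includes zero, the test set cannot distinguish the two readers, whatever the headline accuracies suggest.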

Ecological Validity and Real-World Performance

Human observers in natural contexts access rich information sources unavailable to isolated models. A security officer monitoring surveillance footage integrates visual data with contextual knowledge about normal activity patterns, behavioral cues, and situational factors.

Replicating this ecological context in model evaluation remains challenging. Benchmark datasets represent simplified versions of complex real-world scenarios, potentially overestimating automated system capabilities.

Longitudinal studies tracking deployed system performance provide more realistic assessment but require significant resources and introduce confounding variables as both technology and operational contexts evolve.

Ethical Considerations in Accuracy Comparisons ⚖️

The question of whether machines match or exceed human accuracy carries profound ethical implications, particularly in high-stakes domains like healthcare, criminal justice, and employment decisions.

Demonstrating superhuman accuracy in controlled evaluations doesn’t guarantee ethical deployment. Models may perpetuate or amplify biases present in training data, producing discriminatory outcomes even while achieving high overall accuracy.
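A basic safeguard is to disaggregate accuracy by group instead of reporting one overall number. The records below are hypothetical: group "A" dominates the test set, so a strong overall score hides much weaker performance on group "B".

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Overall and per-group accuracy from (group, y_true, y_pred) records."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(totals.values())
    per_group = {g: correct[g] / totals[g] for g in totals}
    return overall, per_group


# Hypothetical evaluation set in which group "A" is the large majority.
records = (
    [("A", 1, 1)] * 85 + [("A", 0, 1)] * 5    # group A: 85 of 90 correct
    + [("B", 1, 1)] * 5 + [("B", 1, 0)] * 5   # group B: 5 of 10 correct
)
overall, per_group = accuracy_by_group(records)
print(f"overall={overall:.2f}")                         # overall=0.90
print({g: round(a, 2) for g, a in per_group.items()})  # {'A': 0.94, 'B': 0.5}
```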

Accountability questions arise when automated systems make consequential decisions. When a diagnostic algorithm misses a treatable condition, responsibility attribution becomes complex—does fault lie with developers, healthcare providers, or data curators?

Transparency and Trust Building

Public acceptance of automated decision systems depends not merely on accuracy but on trustworthiness, fairness, and comprehensibility. A model might achieve higher accuracy than human decision-makers while generating less public trust if its reasoning remains opaque.

Regulatory frameworks increasingly demand explainability, bias auditing, and human oversight for consequential automated decisions. These requirements reflect the recognition that accuracy alone is insufficient to ensure ethical and socially acceptable deployment.

The Future Landscape of Human-Machine Performance 🚀

As machine learning techniques advance and computational resources expand, model capabilities will continue improving. However, certain human cognitive strengths may prove more durable than initially anticipated.

Common sense reasoning, contextual flexibility, and genuine understanding remain challenging for current approaches. While narrow task performance increasingly favors machines, general intelligence integrating knowledge across domains remains distinctly human.

Future developments may shift focus from competition to augmentation—designing systems that enhance human capabilities rather than replace them. Brain-computer interfaces, cognitive prosthetics, and intelligent assistants represent potential directions for this synergistic approach.

Practical Implications for Organizations and Individuals

Understanding relative human and machine strengths enables better technology adoption decisions. Organizations should deploy automation where consistency, scale, and speed provide clear advantages while retaining human involvement where contextual judgment and flexibility matter most.

Individual professionals should focus on developing skills complementary to machine capabilities: creative problem-solving, empathetic communication, ethical reasoning, and adaptive learning. These distinctly human competencies become increasingly valuable as routine cognitive tasks become automated.

Education systems must evolve accordingly, emphasizing critical thinking, collaborative skills, and technological literacy over rote memorization and procedural execution—areas where machines already excel.

Reframing the Question: Collaboration Over Competition

The framing of “human versus machine” may itself be misleading. Rather than viewing artificial intelligence as a competitor to human intelligence, we might recognize these as complementary forms of information processing, each with distinct advantages.

Humans bring contextual understanding, ethical judgment, emotional intelligence, and adaptive flexibility. Machines contribute consistency, scalability, speed, and pattern recognition across massive datasets. Optimal outcomes emerge from thoughtful integration of these capabilities.

The most productive question isn’t whether machines surpass humans, but how we can best combine human and artificial intelligence to address complex challenges beyond either’s individual capacity. This collaborative perspective promises more beneficial technological development than competition-focused framing.

As we continue developing and deploying increasingly capable automated systems, maintaining this balanced perspective—recognizing both impressive capabilities and fundamental limitations—will prove essential for realizing beneficial applications while avoiding overconfidence in technological solutions.

Toni Santos is a meteorological researcher and atmospheric data specialist focusing on the study of airflow dynamics, citizen-based weather observation, and the computational models that decode cloud behavior. Through an interdisciplinary and sensor-focused lens, Toni investigates how humanity has captured wind patterns, atmospheric moisture, and climate signals across landscapes, technologies, and distributed networks.

His work is grounded in a fascination with atmosphere not only as phenomenon, but as carrier of environmental information. From airflow pattern capture systems to cloud modeling and distributed sensor networks, Toni uncovers the observational and analytical tools through which communities preserve their relationship with the atmospheric unknown.

With a background in weather instrumentation and atmospheric data history, Toni blends sensor analysis with field research to reveal how weather data is used to shape prediction, transmit climate patterns, and encode environmental knowledge. As the creative mind behind dralvynas, Toni curates illustrated atmospheric datasets, speculative airflow studies, and interpretive cloud models that revive the deep methodological ties between weather observation, citizen technology, and data-driven science.

His work is a tribute to:

- The evolving methods of Airflow Pattern Capture Technology
- The distributed power of Citizen Weather Technology and Networks
- The predictive modeling of Cloud Interpretation Systems
- The interconnected infrastructure of Data Logging Networks and Sensors

Whether you're a weather historian, atmospheric researcher, or curious observer of environmental data wisdom, Toni invites you to explore the hidden layers of climate knowledge: one sensor, one airflow, one cloud pattern at a time.